Special thanks to Thomas Sauerer for his great support!

One important note upfront: the integration with Aria Automation shown here is primarily for demo purposes, to show the capabilities of the platform, rather than for production use. In the upcoming release, Broadcom will focus on a more comprehensive integration with the Aria Automation Cloud Consumption Interface.

Building a private cloud requires more than just a virtualization layer. Besides several other functionalities, it requires first and foremost self-service capabilities and a rich service catalog that meets its consumers’ needs. VMware Cloud Foundation with the Aria Automation stack serves those needs. Where in the past many of our customers focused on IaaS services, more and more of them have since built PaaS services such as databases using custom scripts and workflows.

To reduce customers’ operational challenges, VMware by Broadcom has started to offer Database-as-a-Service functionality, which is delivered by the Data Services Manager (DSM). While DSM can run standalone with a vSphere environment, it includes an Aria Automation integration which automatically exposes its services in the Service Broker catalog.

This blog explains how to set up DSM for exactly this purpose.

The official documentation provides another level of detail that should help with getting it set up.

Deployment of the appliance

On the download page of DSM (version 2.0.3 in this case) there are multiple file downloads. First you need the “Provider virtual appliance”.

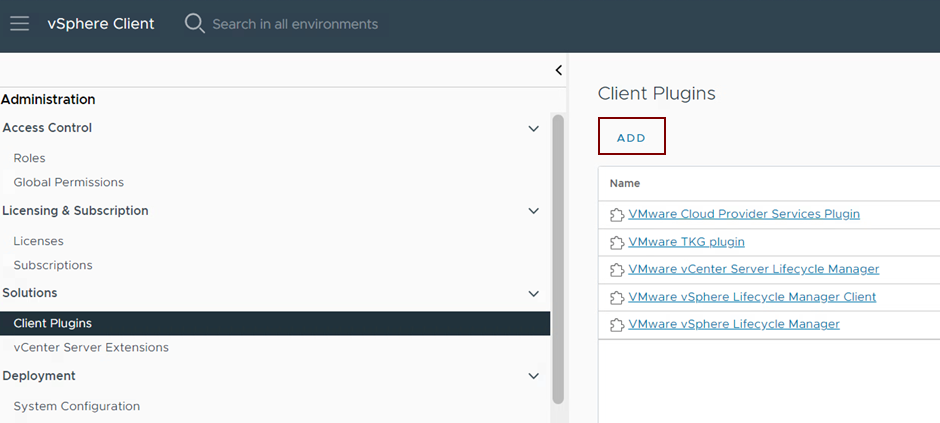

DSM includes a vCenter plugin which is automatically installed once the appliance has been deployed. This however requires that the OVA deployment process is invoked from the “Client Plugins” menu in vCenter.

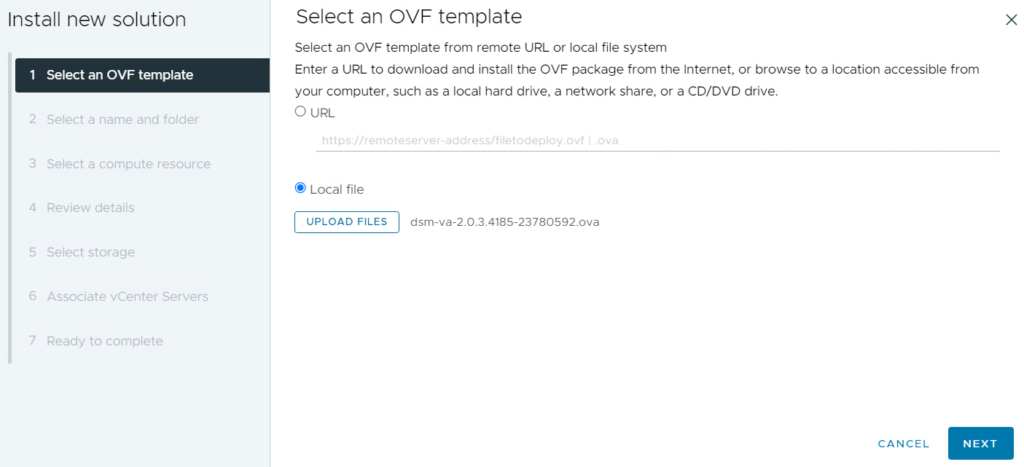

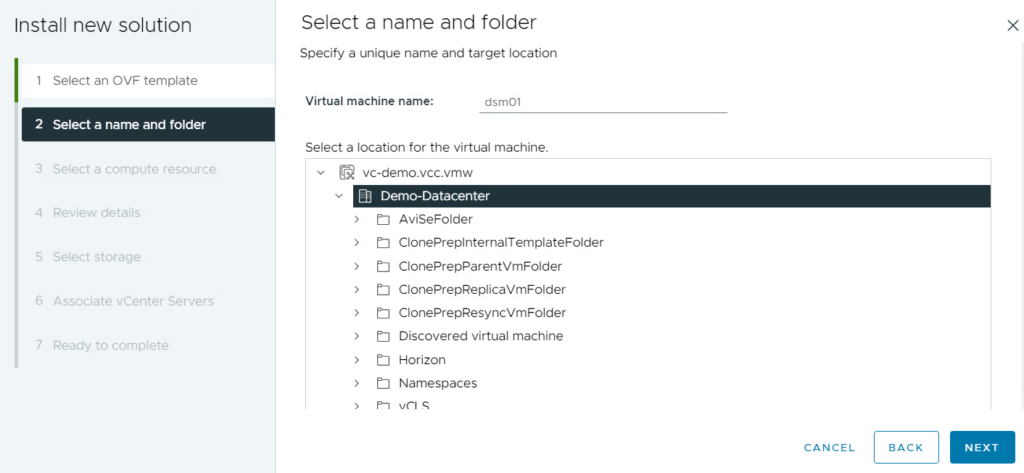

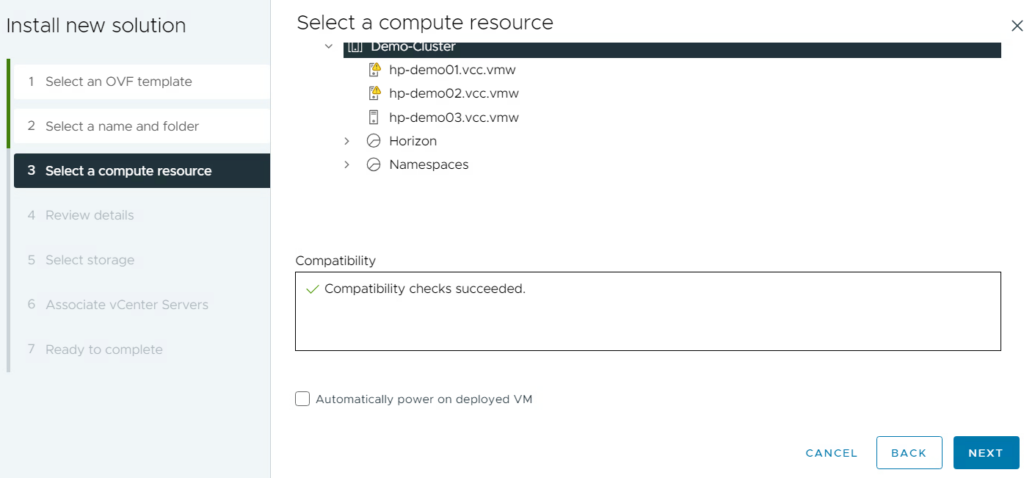

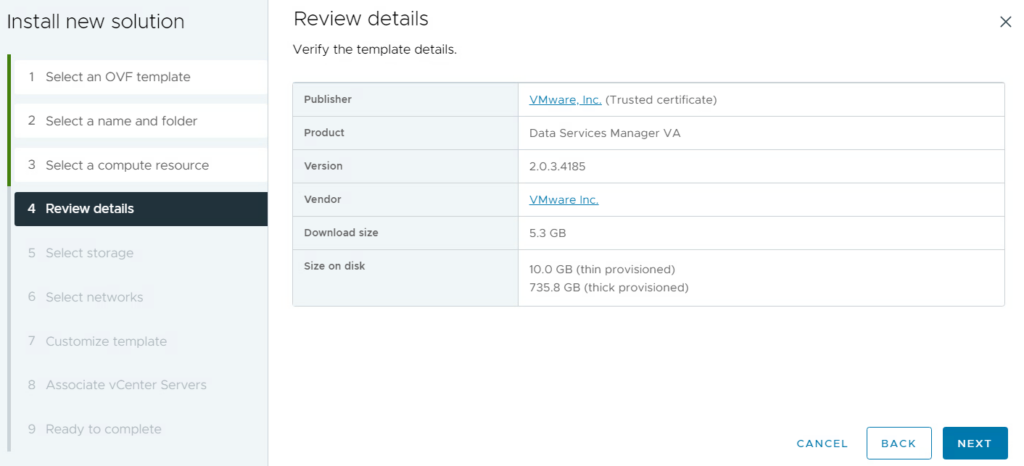

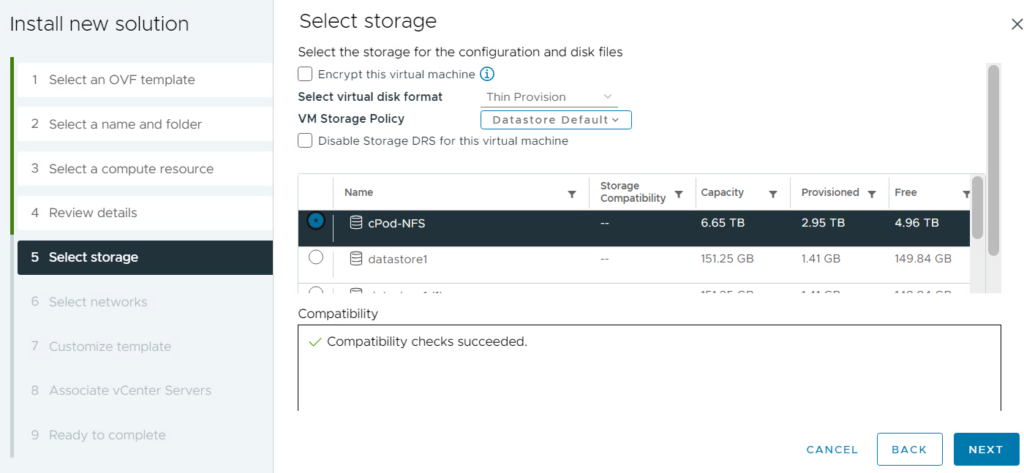

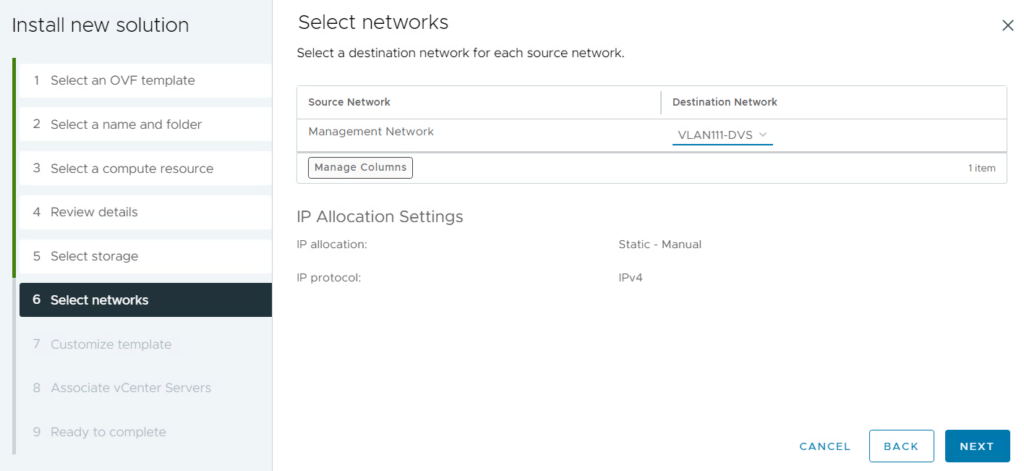

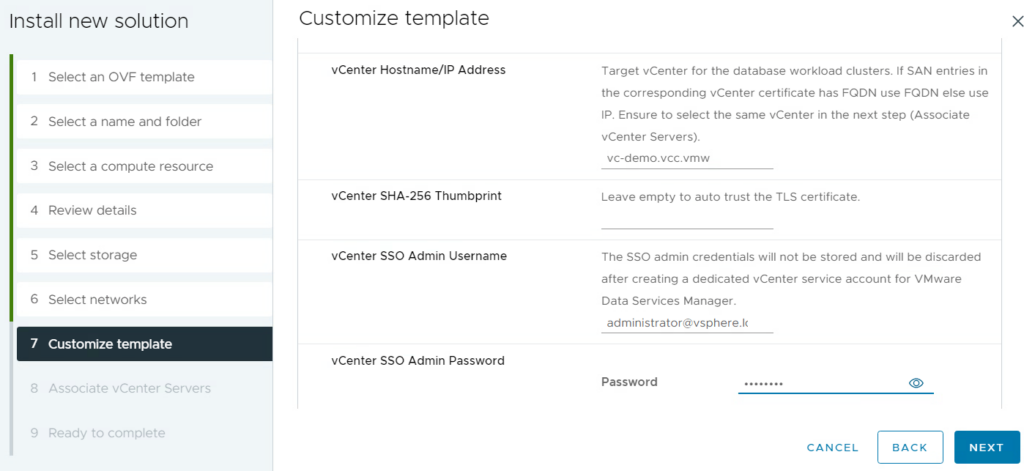

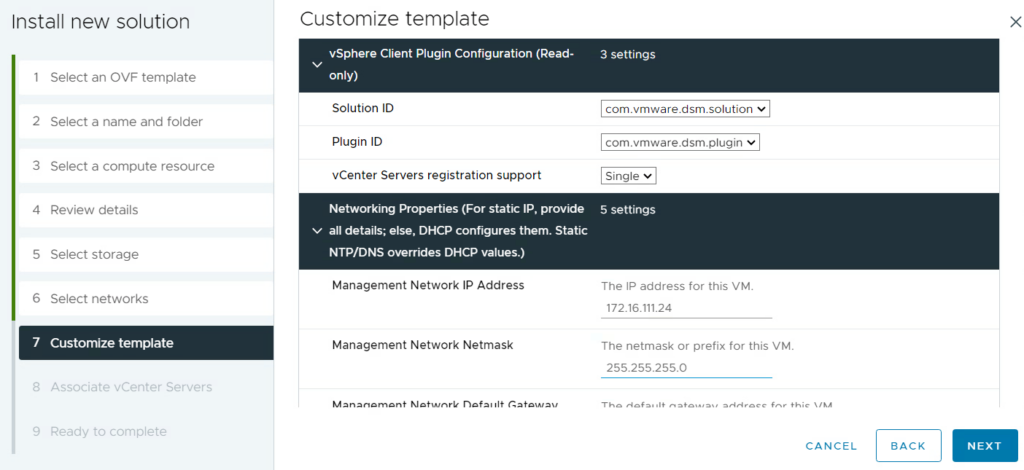

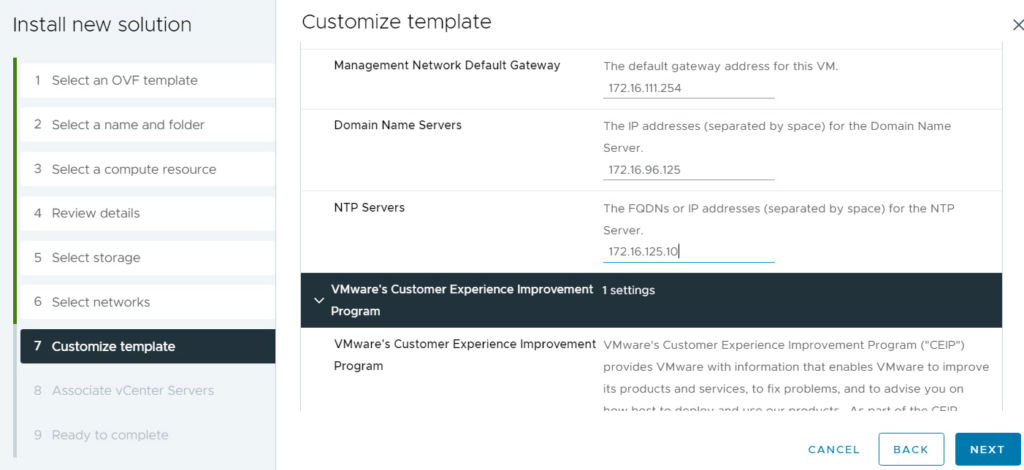

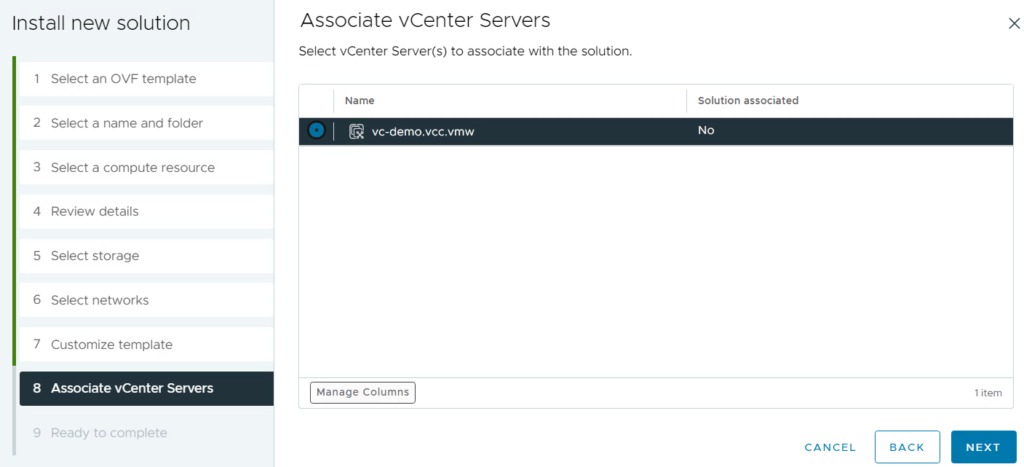

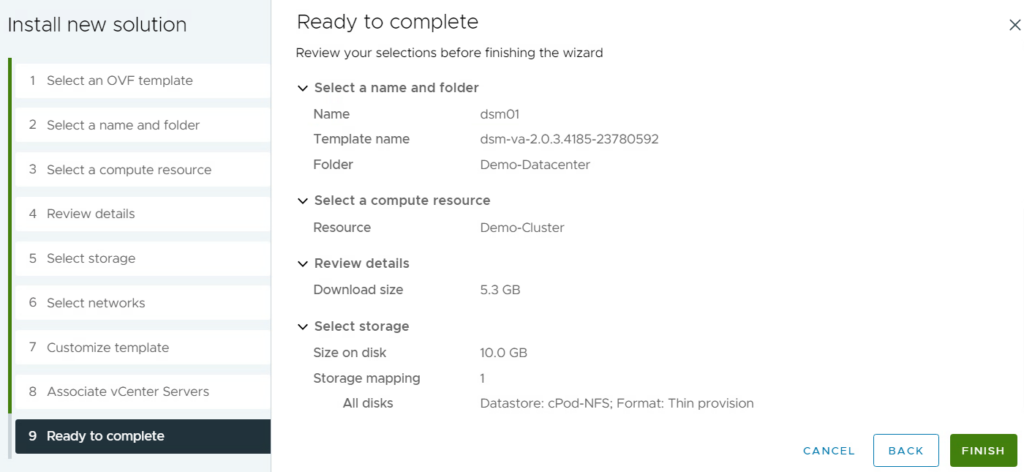

This section walks through a normal OVA import process, which you can follow from the screenshots.

Select the OVA file to upload

Define a name for the VM and select the folder for the import

Select the compute resource / cluster in this step and make sure you don’t tick the checkbox “Automatically power on deployed VM”. If you do, the process will fail: it tries to start the VM anyway, notices that the VM is already running, and breaks.

Select the target storage

Select the target network

Specify the required parameters with the following guidance:

- Make sure you specify the vCenter server as an FQDN that resolves via DNS. Although an IP address should work as well, in my case I wasn’t able to install the plugin afterwards when I used one.

- Only specify one DNS server. Multiple entries must be separated by a space, but the UI doesn’t allow typing a space character, and separating servers with commas leads to an issue afterwards. This is a known issue and will be fixed in the next release.
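Before starting the import, it can be worth confirming that the value you plan to enter really is a resolvable FQDN. The following is a minimal sketch using only the Python standard library; the function names are my own and not part of DSM, and the lookup uses whatever DNS servers the machine running the script is configured with, which may differ from the one you enter in the wizard.

```python
import ipaddress
import socket


def is_ip_literal(value):
    """Return True if value is an IP address rather than a host name."""
    try:
        ipaddress.ip_address(value)
    except ValueError:
        return False
    return True


def resolve_fqdn(fqdn, resolver=socket.getaddrinfo):
    """Return the sorted, de-duplicated addresses a name resolves to ([] on failure).

    The resolver argument only exists so the lookup can be replaced in tests.
    """
    try:
        results = resolver(fqdn, None)
    except socket.gaierror:
        return []
    # Each getaddrinfo entry is (family, type, proto, canonname, sockaddr);
    # the address is the first element of sockaddr.
    return sorted({entry[4][0] for entry in results})
```

Calling `resolve_fqdn()` with your vCenter name should return at least one address, and `is_ip_literal()` should return False for the value you enter in the wizard.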

Finally select the vCenter to integrate the plugin, review the summary and start the import process.

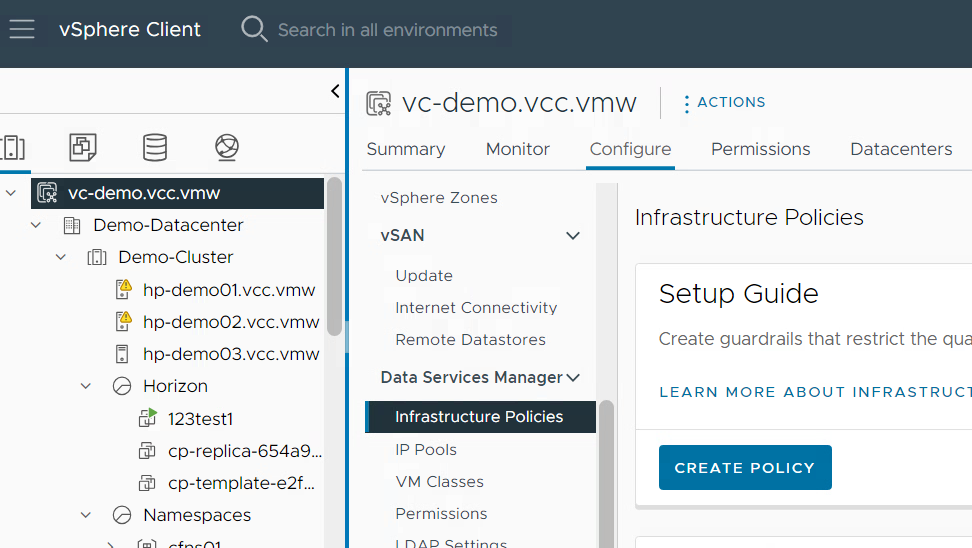

Configuring DSM in vCenter

The basic configuration of DSM is done on the vCenter level –> configuration tab. You’ll find all the following dialogs in the “Data Services Manager” subsection.

If you face an issue after the VM deployment, e.g. the plugin is not available, there is a script on the appliance that might help with troubleshooting:

python3 /opt/vmware/tdm-provider/check-install/check_install.py

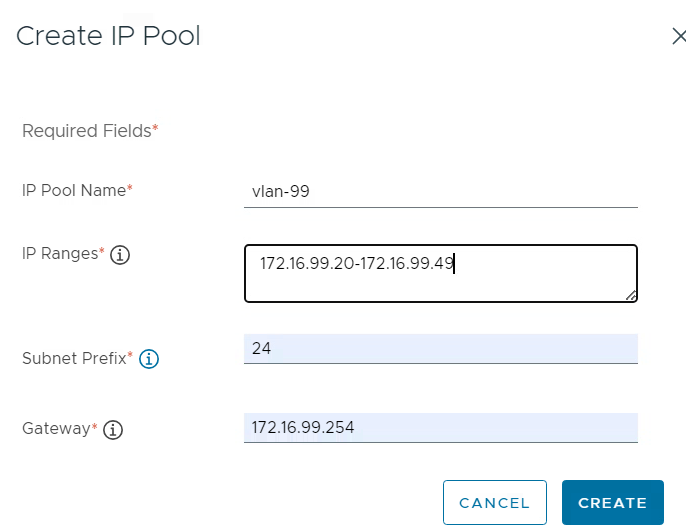

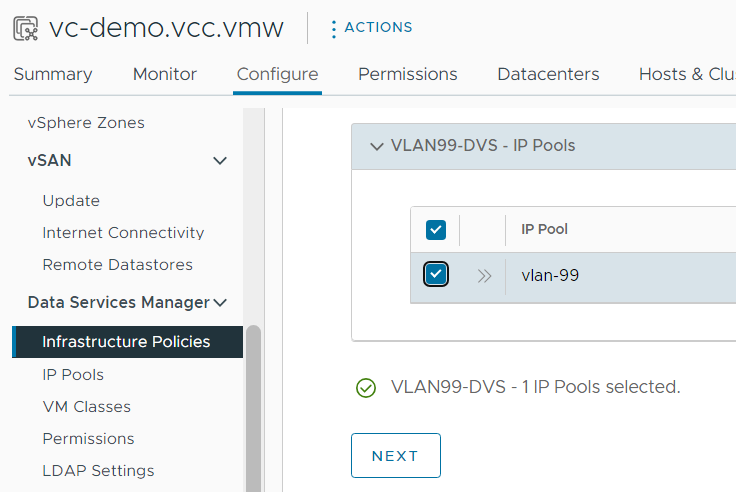

As DSM deploys VMs that host database services, each of them needs an IP address. This is handled by IP pools, which provide a range of valid IPs to be used for the DB deployments.
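When planning a pool, it helps to know how many addresses a range actually contains and whether it collides with reserved addresses. This is a small sketch with the standard `ipaddress` module; the range, network and gateway in the usage note are hypothetical lab values, the functions are illustrative rather than anything DSM provides, and the sketch assumes IPv4.

```python
import ipaddress


def pool_addresses(first, last):
    """Return every address from first to last, both included."""
    start = ipaddress.ip_address(first)
    end = ipaddress.ip_address(last)
    if end < start:
        raise ValueError("pool end lies before pool start")
    return [ipaddress.ip_address(n) for n in range(int(start), int(end) + 1)]


def check_pool(first, last, network_cidr, gateway):
    """Return a list of problems found in the planned pool (empty if none)."""
    network = ipaddress.ip_network(network_cidr)
    reserved = {
        network.network_address,
        network.broadcast_address,
        ipaddress.ip_address(gateway),
    }
    problems = []
    for address in pool_addresses(first, last):
        if address not in network:
            problems.append(f"{address} is outside {network}")
        elif address in reserved:
            problems.append(f"{address} is reserved (network, broadcast or gateway)")
    return problems
```

For example, `len(pool_addresses("172.16.99.20", "172.16.99.29"))` gives the number of addresses the pool can hand out, and `check_pool("172.16.99.20", "172.16.99.29", "172.16.99.0/24", "172.16.99.1")` returns an empty list when the range is clean.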

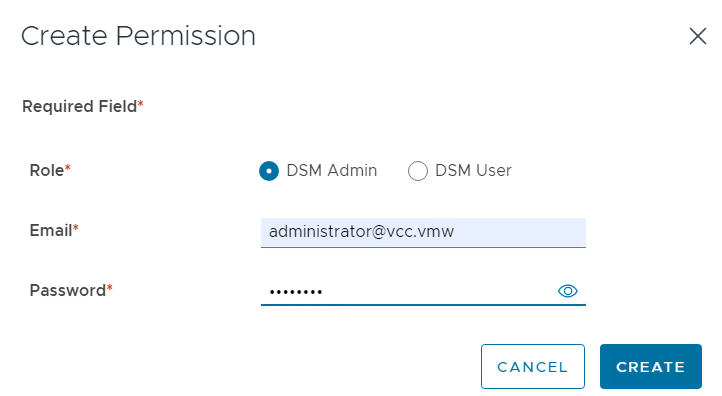

In addition, you’ll need users that can access the DSM web interface. Those users can be local users or they can come from an LDAP source. In this example I created a local administrative user.

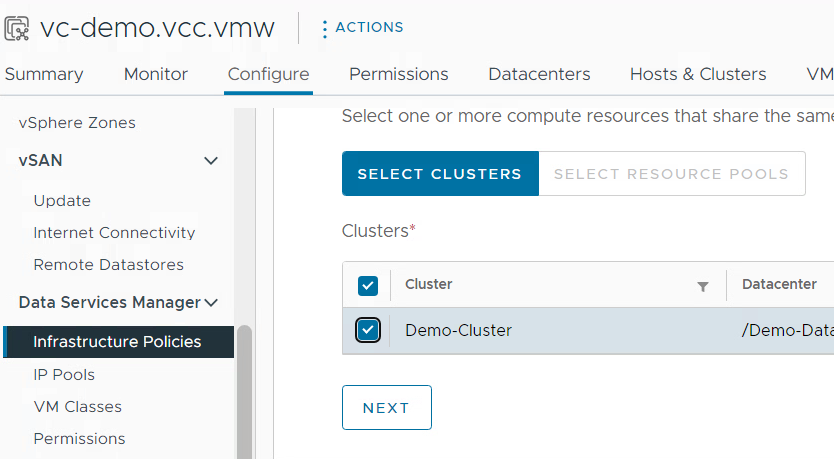

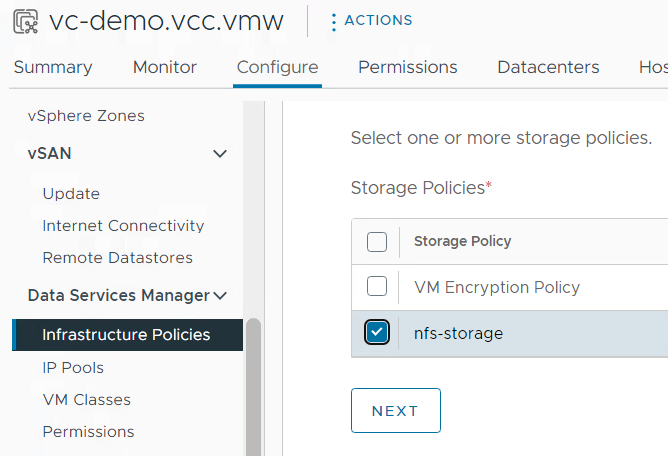

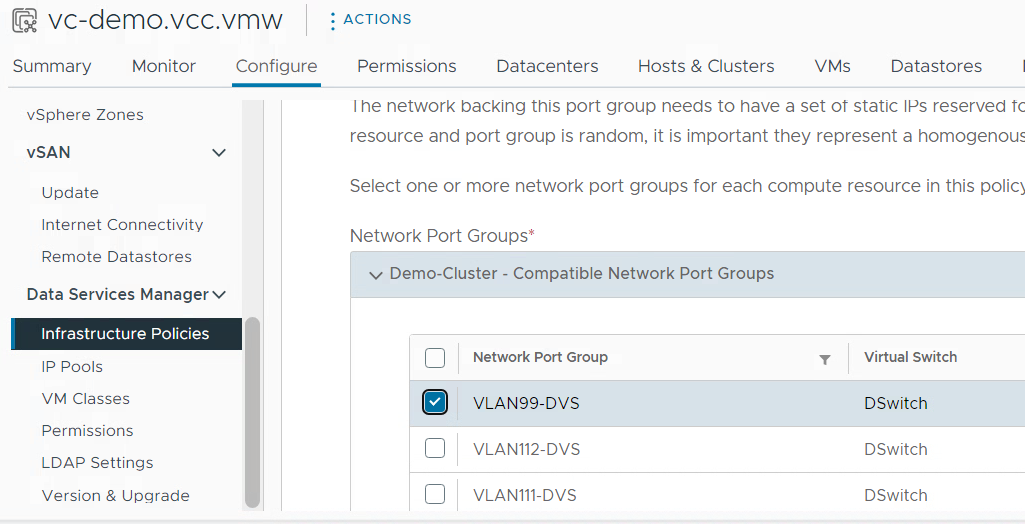

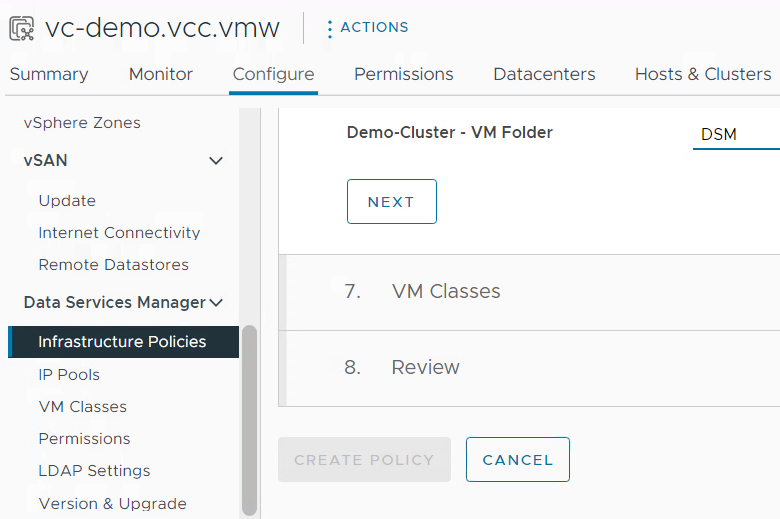

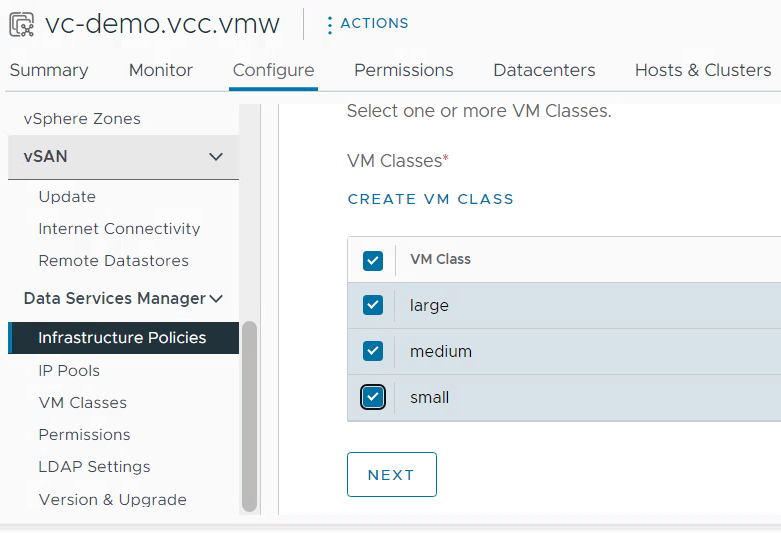

Finally, you must create an infrastructure policy. The policy defines which cluster, storage and networks to use. It also assigns the IP pool and specifies a vCenter folder as well as VM classes for the deployment of the VMs. When provisioning, users can select which policy to use.

After finalizing those steps the preparation of the S3 storage comes next.

Preparation of S3 storage

Broadcom provides the images of the database instances for DSM. They are available in the download portal for the respective DSM version as “Air-gap Environment Repository”.

To enable DSM to use them, they must be stored on S3-based storage. It can either be Amazon S3 or any other S3-compatible storage. I would recommend not using AWS S3 for the moment, for the following reasons:

- In my tests, S3 worked with the us-east-1 region only

- The exact IAM requirements on AWS are not documented yet

- The deployment time using S3 is usually longer, which in my case broke the Aria Automation integration due to a hard-coded 15-minute timeout.

I decided to use MinIO as an on-prem S3 repository. There are multiple guides available on the web on how to set it up. One that mostly worked for me is available here. Be aware that DSM requires a TLS connection to S3, which means you must make sure that the certificate matches the DNS name. I’d recommend using a DNS name to access the S3 repository and creating an appropriate certificate. The main steps are described in the documentation linked above. I struggled with the folder permissions of the path to the cert file: make sure that all levels have read access for the minio-user. If everything has been configured correctly, the S3 API should be available on port 9000:

https://<minio-server>:9000

The MinIO web UI uses port 9001 by default.
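Since the folder permissions on the path to the certificate were the part that cost me time, here is a rough sketch for finding the level that blocks access. It is my own helper, not part of MinIO or DSM. It walks every directory from the root down to the cert file and checks the classic owner/group/other mode bits for a given user: the search (execute) bit on directories and the read bit on the file. It assumes a Linux system and ignores ACLs, SELinux and root privileges.

```python
import os
import pwd
import stat
from pathlib import Path


def ids_for_user(name):
    """Look up the uid and the set of group ids of a local user."""
    entry = pwd.getpwnam(name)
    return entry.pw_uid, set(os.getgrouplist(name, entry.pw_gid))


def blocked_levels(cert_path, uid, gids):
    """Return the path levels the given uid/gids cannot traverse or read."""
    target = Path(cert_path).resolve()
    levels = list(reversed(target.parents)) + [target]
    blocked = []
    for level in levels:
        info = level.stat()
        # Pick the permission class that applies to this user.
        if info.st_uid == uid:
            read_bit, search_bit = stat.S_IRUSR, stat.S_IXUSR
        elif info.st_gid in gids:
            read_bit, search_bit = stat.S_IRGRP, stat.S_IXGRP
        else:
            read_bit, search_bit = stat.S_IROTH, stat.S_IXOTH
        # Directories must be searchable, the file itself must be readable.
        needed = search_bit if level.is_dir() else read_bit
        if not info.st_mode & needed:
            blocked.append(str(level))
    return blocked
```

A typical call would be `blocked_levels(cert_file, *ids_for_user("minio-user"))`, where `cert_file` is the location of your certificate; an empty list means no level is blocking by mode bits.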

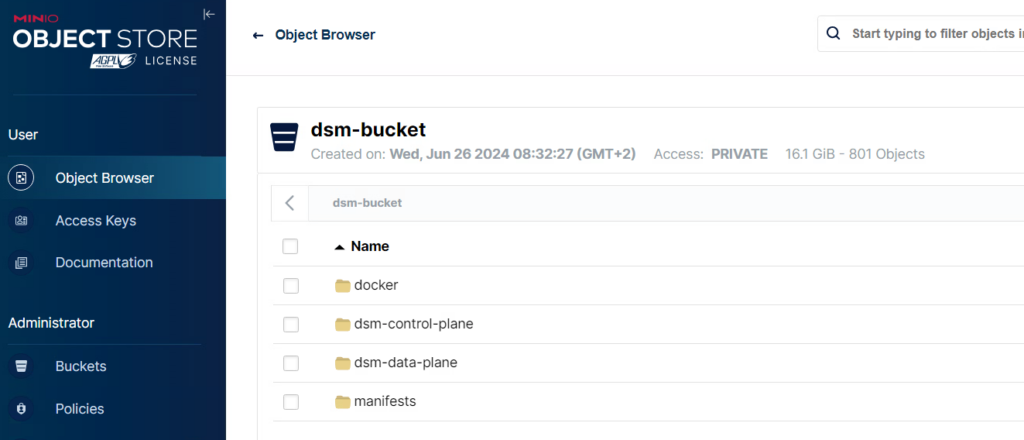

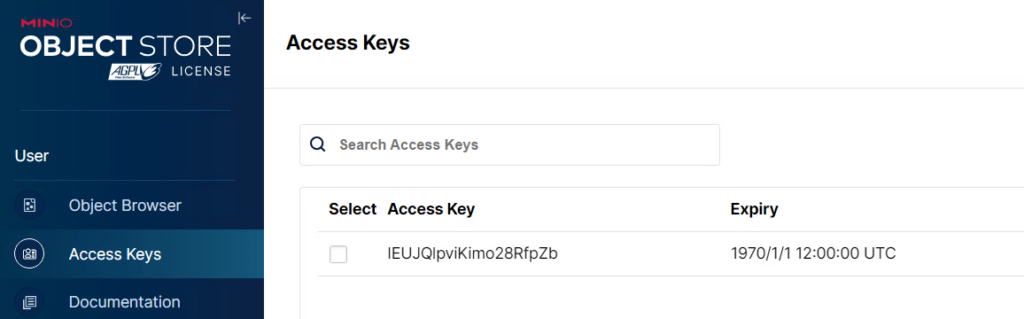

If the S3 server has been set up correctly, create a bucket on it and upload the contents of the extracted air_gap_deliverable folder to it. Once done, you only need to create an access key and secret, which must be specified in the DSM interface.
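To double-check an upload, you can list the object keys the folder contents should map to and compare them with what the bucket actually contains. The sketch below only inspects the local folder; it assumes the contents go to the bucket root with their relative paths preserved, and it leaves the upload itself and the bucket listing to whichever S3 client you use. The function names are illustrative.

```python
from pathlib import Path


def planned_object_keys(folder):
    """Return the object keys the files below folder would get at the bucket root."""
    root = Path(folder)
    return sorted(
        path.relative_to(root).as_posix()
        for path in root.rglob("*")
        if path.is_file()
    )


def missing_keys(folder, bucket_keys):
    """Return planned keys that do not appear in a listing of the bucket."""
    present = set(bucket_keys)
    return [key for key in planned_object_keys(folder) if key not in present]
```

Passing the extracted air_gap_deliverable folder and a key listing from your S3 client to `missing_keys()` should return an empty list once the upload is complete.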

This is how the bucket should look:

Access keys can be created here:

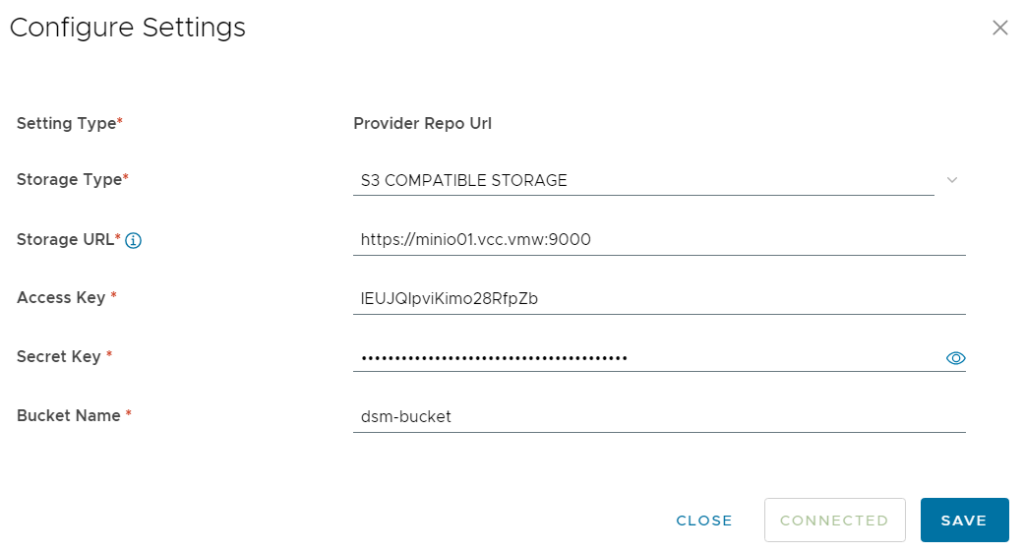

DSM connection to S3 storage

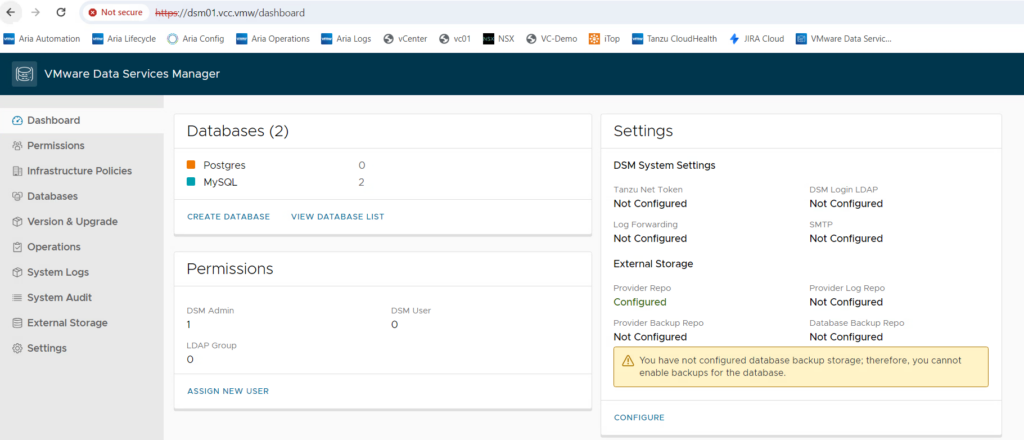

After all the configuration, the DSM UI should be accessible directly through the browser.

The configuration of repos is still required (in this screenshot, the provider repo is already configured). To configure them, go to the settings menu and open the storage settings tab.

Note: You cannot remove or change the provider repo configuration once it has been successfully configured. So, a change from e.g. AWS S3 to a local S3 is not possible. This relates to version 2.0.3 and might change in upcoming releases.

For the repo configuration you need the details of your S3 storage as shown in the screen below. You could use the same S3 storage for all three repos: provider, backup and log.

DSM Database Activation and Test

Before databases can be deployed, one or more database versions must be enabled. Currently MySQL and PostgreSQL are supported.

You can do the activation in the “Version & Upgrade” menu. Be aware that this process might take about 20 minutes per database type. For this blog and demo I activated the MySQL database in my lab.

If all has been configured properly, you can test creation of a database through the “Databases” menu.

Integration of DSM and Aria Automation

The primary purpose of this blog is to demonstrate the integration of database services in the Aria Automation catalog. The download page of Data Services Manager provides a script that automatically configures this integration. You’ll find it in the download section as “AriaAutomation_DataServicesManager”.

There is a readme.md file which explains how to set up the solution. In a nutshell, I’d recommend the following steps:

- Use a Linux system and copy the files to it

- Make sure Python 3.10 or higher is installed

- Use Aria Automation 8.14 or higher

- Run ‘pip3 install -r requirements.txt’ to make sure all dependent modules are installed

- Edit the config.json file as per description in readme.md
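The two version requirements from the list above can be checked up front. This is a minimal sketch of such a preflight check; it is not part of the downloaded package, and the Aria Automation version has to be passed in by hand because the sketch does not query any API.

```python
import sys


def parse_version(text):
    """Turn a dotted version string such as '8.16.2' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def preflight(python_version, aria_version):
    """Return a list of unmet prerequisites (empty if everything is fine)."""
    problems = []
    if tuple(python_version[:2]) < (3, 10):
        problems.append("Python 3.10 or higher is required")
    if parse_version(aria_version) < (8, 14):
        problems.append("Aria Automation 8.14 or higher is required")
    return problems


# Check the interpreter running this script against a manually entered
# Aria Automation version (the value here is only an example).
print(preflight(sys.version_info, "8.16.2"))
```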

Example of config.json

{
  "dsm_hostname": "172.16.111.24",
  "dsm_user_id": "administrator@vcc.vmw",
  "dsm_password": "<password>",
  "aria_base_url": "https://vra.demo.local",
  "org_id": "084f2d85-99a1-4fd0-bf08-927ea57fd2dd",
  "blue_print_name": "DSM DBaaS",
  "abx_action_name": "DSM-DB-crud",
  "cr_name": "DSMDB",
  "cr_type_name": "Custom.DSMDB",
  "aria_username": "configurationadmin",
  "aria_password": "<password>",
  "env_name": "DSM_ENV",
  "project_name": "demo-project",
  "skip_certificate_check": "True",
  "dsm_root_ca": "XXXXXXX"
}
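A quick sanity check of the edited file can catch leftover placeholders before the script runs. The sketch below is my own helper, not part of the package. It assumes every key from the example above should be present and non-empty; the readme.md remains the authority on what aria.py really requires (for instance, dsm_root_ca may not matter when the certificate check is skipped).

```python
import json

# Keys taken from the example config.json above.
EXAMPLE_KEYS = [
    "dsm_hostname", "dsm_user_id", "dsm_password", "aria_base_url", "org_id",
    "blue_print_name", "abx_action_name", "cr_name", "cr_type_name",
    "aria_username", "aria_password", "env_name", "project_name",
    "skip_certificate_check", "dsm_root_ca",
]
# Placeholder values used in the example that must be replaced.
PLACEHOLDERS = {"<password>", "XXXXXXX"}


def check_config(config):
    """Return a list of problems found in a parsed config (empty if none)."""
    problems = []
    for key in EXAMPLE_KEYS:
        value = config.get(key, "")
        if value == "":
            problems.append(f"{key}: missing or empty")
        elif isinstance(value, str) and value in PLACEHOLDERS:
            problems.append(f"{key}: still a placeholder")
    return problems


def load_and_check(path="config.json"):
    """Parse the JSON file (this also catches syntax errors) and check it."""
    with open(path, encoding="utf-8") as handle:
        return check_config(json.load(handle))
```

Running `load_and_check()` in the folder that contains config.json returns an empty list when nothing obvious is wrong.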

Make sure that the aria.py script has the proper permissions (e.g. chmod 755 aria.py) and run it with this command:

python310 aria.py

If you are using Aria Automation 8.16.2 or higher, it includes support for the cloud template format version 2. This has enhancements e.g. to provide an overview page after deployment. To enable this functionality, use this command:

python310 aria.py enable-blueprint-version-2

Aria Automation UI

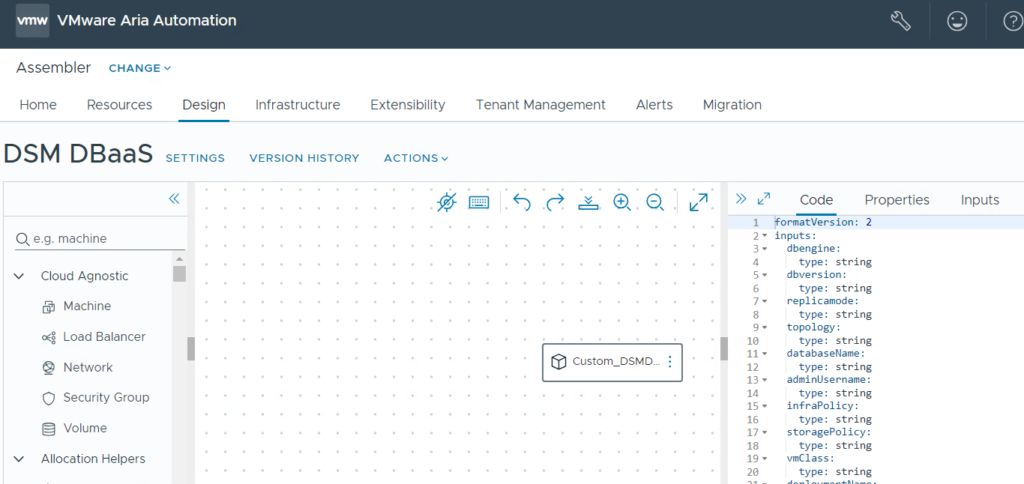

On successful configuration you will find several changes on the Aria Automation side. First, a new cloud template has been created with the name specified in config.json. It leverages a new custom resource based on an ABX action.

In DSM 2.0.3 there is one issue in the aria.py script which might prevent a successful run. If you see an error about “UpdateContentSource”, you must modify line 47 of the aria.py script and change it to

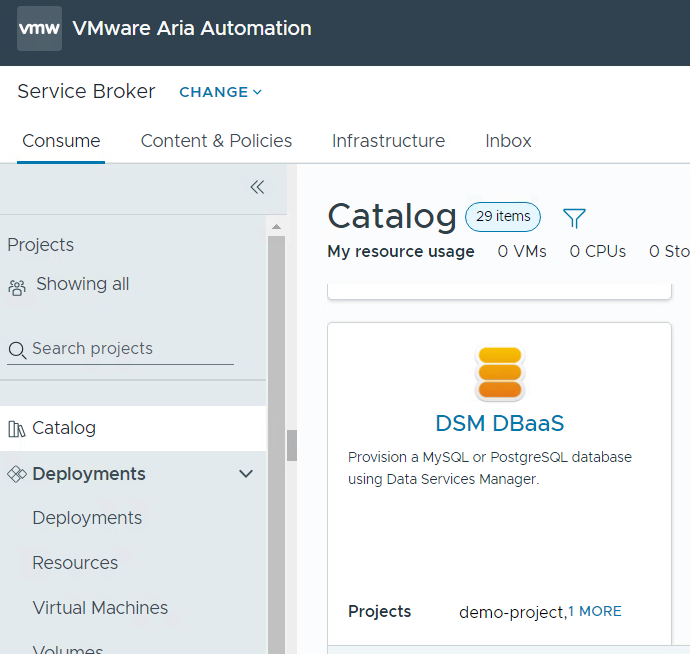

In your catalog you will see a new service “DSM DBaaS”. The script automatically configures the service release and its content source settings. An existing content source might have been renamed, which you can easily revert. In my demo environment I also added a service icon and a service description, which were not set after running the script.

Before you run the DB request through Aria Automation, I highly recommend testing it in DSM directly. Although the Aria Automation integration works fine, it’s not 100% robust yet. If, for example, your database installation fails on DSM because the IP range ran out of addresses, Aria Automation will run into an error. At the same time the DSM deployment will hang. Removing the deployment in Aria Automation will delete it there but won’t delete the DB config in DSM. In addition, no CRUD action is possible in DSM on an Aria-managed database, which prevents you from removing it (though see the troubleshooting section below for some hints on how to do it).

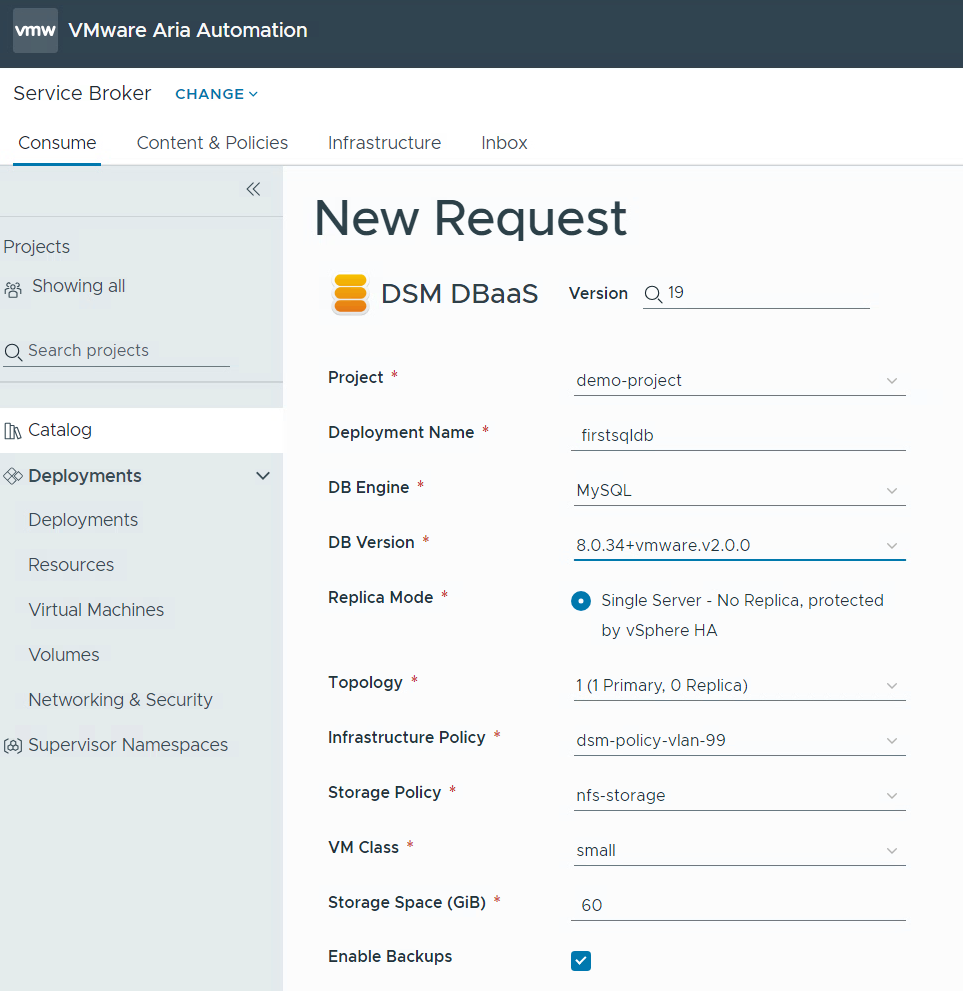

If your test on DSM was successful, you can request a database from Aria Automation. Make sure you only select a database type (e.g. MySQL) that you enabled on DSM before, as there is no validation of that.

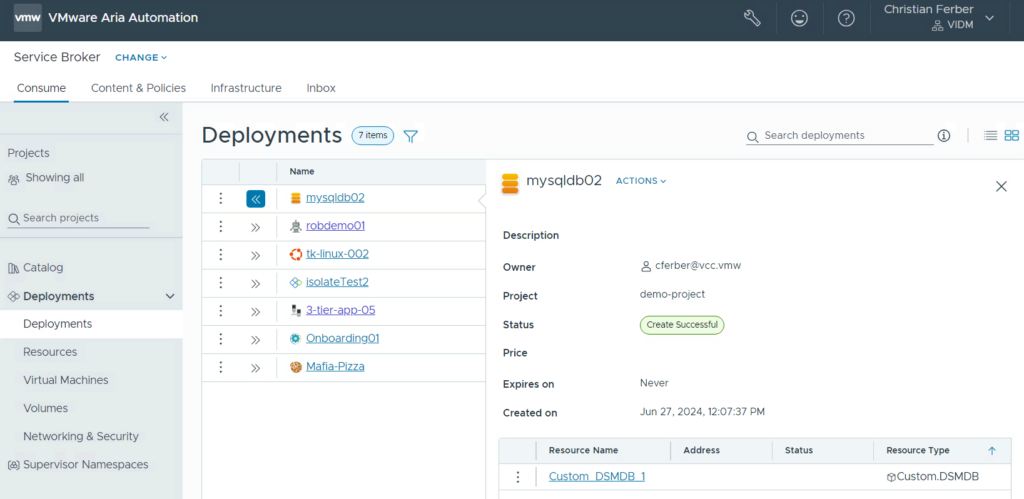

After successful deployment you will see the deployment in the Service Broker interface.

If you enabled blueprint-version-2 you will see the overview page when going into the deployment details. It includes a deeper description on how to access the deployed database.

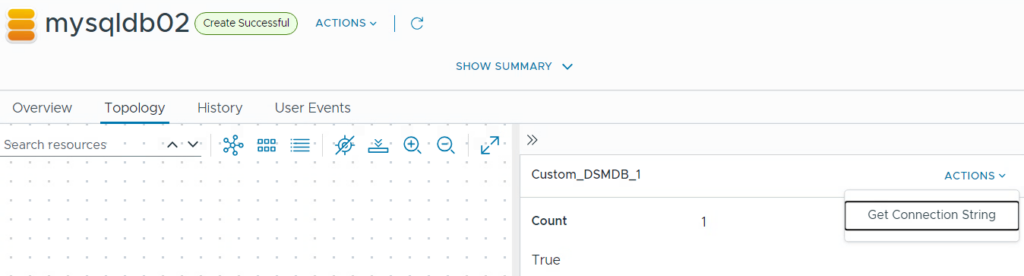

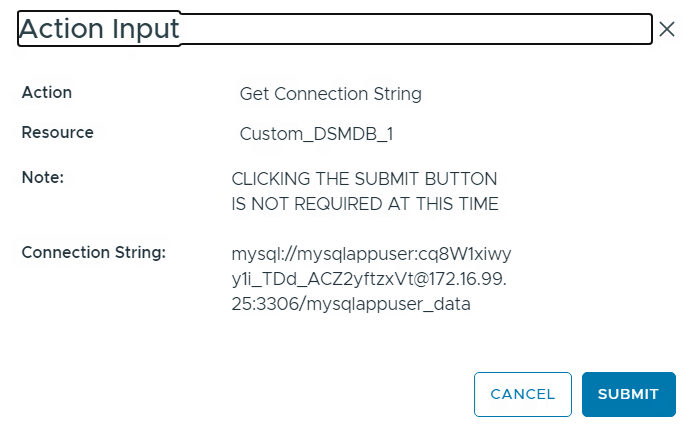

To access the DB there is a day-2 action included which provides the connection string.

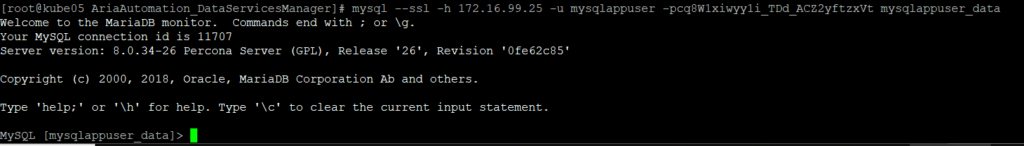

There are multiple ways to access the MySQL database. For a quick check, install the mysql client on a system and execute a command like this:

mysql --ssl -h 172.16.99.25 -u mysqlappuser -pcq8W1xiwyy1i_TDd_ACZ2yftzxVt mysqlappuser_data

Troubleshooting

Appliance deployment issues

As mentioned in one of the previous sections, there is a script on the appliance that helps you troubleshoot configuration issues after the deployment of the appliance:

python3 /opt/vmware/tdm-provider/check-install/check_install.py

Stuck database deployments

In some cases, there can be stuck database deployments that can’t be removed through the UI. This can happen if a provisioning that was invoked by Aria Automation failed.

The deployments are internally managed as Kubernetes resources, which can be inspected via the CLI.

Use these commands to force the deletion of a deployment:

ssh into DSM

cd /opt/vmware/tdm-provider/moneta-gateway

export KUBECONFIG=kubeconfig-gateway.yaml

kubectl get mysqlcluster -A

kubectl delete mysqlcluster <yourcluster>
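If you need to repeat this cleanup, the two kubectl calls can be assembled in a small helper. The sketch below only builds and prints the command lines; it does not run them. It passes the kubeconfig with kubectl's `--kubeconfig` flag instead of the environment variable, and adds `-n` to the delete, on the assumption that the cluster resource lives in a namespace other than the default one (the `-A` listing shows the namespace). The cluster and namespace names are hypothetical.

```python
import shlex


def cleanup_commands(cluster, namespace, kubeconfig="kubeconfig-gateway.yaml"):
    """Build the kubectl calls to list clusters and delete one of them."""
    base = ["kubectl", "--kubeconfig", kubeconfig]
    return [
        base + ["get", "mysqlcluster", "-A"],
        base + ["delete", "mysqlcluster", cluster, "-n", namespace],
    ]


# Print the commands for review; run them from
# /opt/vmware/tdm-provider/moneta-gateway on the DSM appliance.
for argv in cleanup_commands("demo-db", "demo-ns"):
    print(shlex.join(argv))
```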

Have fun!

- Database-as-a-Service with Data Services Manager and Aria Automation - 4. July 2024