First, I would like to thank Ismail Yilmaz and Christian Liebner for their help in developing the modules and verifying the solution.

vRealize Automation 8.x and vRealize Automation Cloud integrate with GitLab and GitHub for source code management. As of today, however, this is a one-way process: blueprints are pulled from the SCM system into vRA, but not pushed back. Many customers want to establish a full development process, from the creation of blueprints to their use in test and production systems, and vRA provides the interfaces needed to do that. The general idea is that when somebody creates a new blueprint version in Cloud Assembly and releases it to the catalog, the code is automatically pushed to a GitHub repository.

Example process:

New blueprint version created and released -> EBS subscription is triggered -> vRO workflow is started -> vRO workflow starts the Codestream pipeline (which does the actual work)

Customers might decide to build an isolated vRA instance or tenant just for developing blueprints. The released blueprints are synced to a git repository, from which one or more separate vRA instances pull their blueprints for test or production use.

This blog post explains a method for implementing a blueprint push model using Codestream, vRealize Orchestrator and the Event Broker framework. It is based on vRA 8.2 but could be used with other versions and with vRA Cloud as well (slight adaptations may be required).

Look at this video to see how the final implementation works.

Technical Requirements

- github.com repository

- Linux host to execute SSH commands

- vRA 8.2 installation with an Enterprise license for Codestream

Preparation of the GitHub repository

All code is stored in a github.com repository. I won't walk through creating one in this post; however, one specific configuration is required. Since you usually don't want to hard-code credentials into pipelines or workflows, use an SSH key to push code to GitHub.

Create an SSH key on the Linux host and make sure the SSH agent is running properly:

ssh-keygen -t rsa -b 4096 (use defaults)

eval "$(ssh-agent -s)"

ssh-add ~/.ssh/id_rsa

ssh-keyscan github.com >> ~/.ssh/known_hosts

Copy the public SSH key:

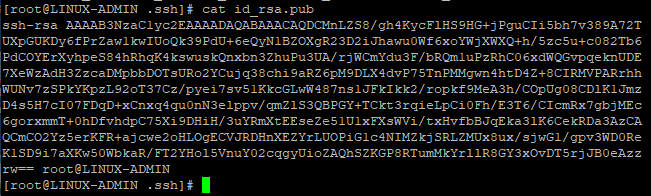

cat ~/.ssh/id_rsa.pub

Copy the output of the above command and open your GitHub repository settings.

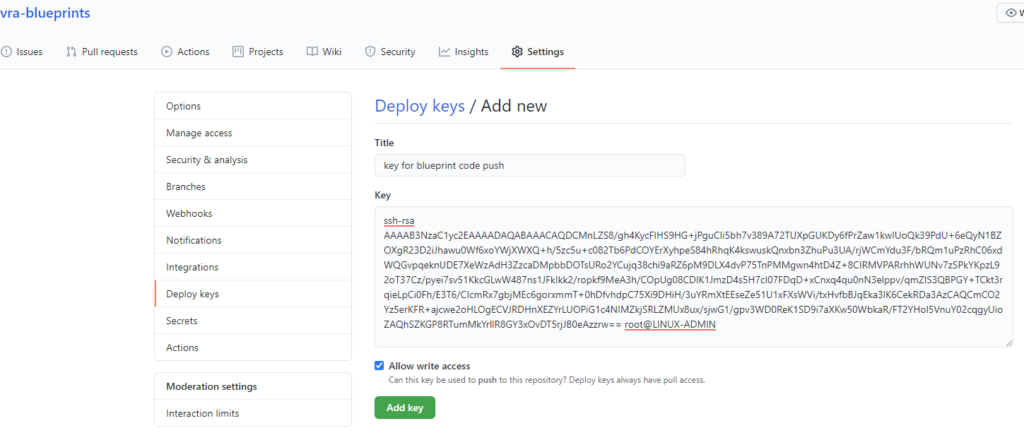

In the GitHub repository, go to Settings -> Deploy keys and add the output from the previous step, including a title.

Make sure you check the “Allow write access” checkbox, then add the key.
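If you prefer to script this step, the following is a minimal sketch that registers the key through GitHub's REST API (`POST /repos/{owner}/{repo}/keys`) and then checks that the Linux host can authenticate with it. It assumes `curl` is installed and that a GitHub token allowed to manage the repository's deploy keys is exported as `GITHUB_TOKEN`; the function names are hypothetical helpers, not part of the downloadable assets.

```shell
# Sketch: register the deploy key via the GitHub REST API, then verify SSH access.

# JSON body for "create a deploy key"; read_only=false is the API equivalent
# of "Allow write access". Values are not JSON-escaped (public keys and
# simple titles contain no quotes or backslashes).
deploy_key_payload() {
  printf '{"title":"%s","key":"%s","read_only":false}' "$1" "$2"
}

# Usage: add_deploy_key <owner> <repo> <title> <public-key-file>
add_deploy_key() {
  local owner="$1" repo="$2" title="$3" pubkey
  if [ -z "${GITHUB_TOKEN:-}" ]; then
    echo "set GITHUB_TOKEN first" >&2
    return 1
  fi
  pubkey=$(<"$4") || return 1
  curl -fsS -X POST "https://api.github.com/repos/$owner/$repo/keys" \
    -H "Accept: application/vnd.github+json" \
    -H "Authorization: Bearer $GITHUB_TOKEN" \
    -d "$(deploy_key_payload "$title" "$pubkey")"
}

# True if the text contains GitHub's successful-authentication greeting.
is_github_greeting() {
  case "$1" in
    *"successfully authenticated"*) return 0 ;;
    *) return 1 ;;
  esac
}

# GitHub ends the session with exit status 1 even on success, so the greeting
# text is checked instead of ssh's exit code. BatchMode=yes makes ssh fail
# rather than prompt, as it would inside a non-interactive pipeline task.
check_github_ssh() {
  local out
  out=$(ssh -T -o BatchMode=yes git@github.com 2>&1)
  if is_github_greeting "$out"; then
    echo "OK: $out"
  else
    echo "FAILED: $out" >&2
    return 1
  fi
}
```

For example: `add_deploy_key my-org blueprints vra-push ~/.ssh/id_rsa.pub && check_github_ssh` (owner, repository and title are placeholders).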

Preparation of the Linux host

The Linux host is used as a temporary workspace for running scripts. In a nutshell, we clone the GitHub repository, add the blueprint modifications and push them back to GitHub.
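A minimal sketch of that clone/modify/push cycle as a shell function, assuming the released blueprint YAML has already been written to a local file and the Linux user has a git identity configured (`git config --global user.name` / `user.email`). The `<blueprint-name>/blueprint.yaml` folder layout and the name `push_blueprint` are assumptions for illustration; the actual Codestream pipeline in the download may structure this differently.

```shell
# Sketch: clone the repository, write the released blueprint, commit and push.
# Usage: push_blueprint <repo-url> <branch> <blueprint-name> <blueprint-file> <message>
push_blueprint() {
  local repo_url="$1" branch="$2" bp_name="$3" message="$5" src workdir rc
  [ -f "$4" ] || { echo "no such file: $4" >&2; return 1; }
  # Resolve the blueprint path before the subshell changes directory.
  src="$(cd "$(dirname "$4")" && pwd)/$(basename "$4")"
  workdir=$(mktemp -d) || return 1
  (
    git clone --quiet "$repo_url" "$workdir/repo" || exit 1
    cd "$workdir/repo" || exit 1
    # Continue from the target branch if it already exists on the remote.
    if git ls-remote --exit-code --heads origin "refs/heads/$branch" >/dev/null; then
      git checkout --quiet "$branch" || exit 1
    fi
    # Assumed layout: one folder per blueprint containing blueprint.yaml.
    mkdir -p "$bp_name" && cp "$src" "$bp_name/blueprint.yaml" || exit 1
    git add "$bp_name/blueprint.yaml" || exit 1
    # Nothing staged means the content is unchanged, so there is nothing to push.
    git diff --cached --quiet && exit 0
    git commit --quiet -m "$message" || exit 1
    git push --quiet origin "HEAD:refs/heads/$branch"
  )
  rc=$?
  rm -rf "$workdir"
  return "$rc"
}
```

For example: `push_blueprint git@github.com:my-org/blueprints.git main demo ./blueprint.yaml "Release demo v2"`. The function works in a throwaway directory and skips the commit when the file is unchanged, so re-running it for an identical release does not create empty commits.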

A Linux host is only one option for executing these tasks; you could also use a Docker container or a Kubernetes environment as a dynamic workspace. The Linux host is used in this example for no particular reason other than that it was already available.

The following preparations are required on the Linux host:

- SSH enabled with user/password authentication

- git installed (yum -y install git for CentOS)

- jq installed (yum -y install jq for CentOS)
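A small preflight check for these tools, as a sketch assuming a bash shell; `check_tools` is a hypothetical helper name. It reports every missing command instead of stopping at the first one.

```shell
# Preflight check for the commands the pipeline runs on the Linux host.
# Prints every missing command to stderr; returns 1 if any is missing.
check_tools() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, as root on CentOS: `check_tools git jq || yum -y install git jq`.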

Import Codestream pipeline

Download the Codestream pipeline export.

Before importing, modify the YAML file and replace !!CUSTOM!! with the name of the project in your environment into which you want to import the pipeline.
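The replacement can be done with `sed`. A sketch, assuming the export was saved locally (file and function names are placeholders); the project name is escaped so that characters such as `&` are inserted literally:

```shell
# Replace every !!CUSTOM!! placeholder in the exported pipeline YAML.
# Usage: set_pipeline_project <export.yaml> <output.yaml> <project name>
set_pipeline_project() {
  local in_file="$1" out_file="$2" escaped
  # Escape \, & and the | delimiter, which are special in a sed replacement.
  escaped=$(printf '%s' "$3" | sed -e 's/[\\&|]/\\&/g')
  # Single quotes around the pattern keep interactive bash from treating
  # "!!" as history expansion.
  sed -e 's|!!CUSTOM!!|'"$escaped"'|g' "$in_file" > "$out_file"
}
```

For example: `set_pipeline_project pipeline.yaml pipeline-import.yaml "My Project"`, then import `pipeline-import.yaml`.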

After the import, adjust the input values to match your environment. The descriptions are self-explanatory; see the example here:

If you don’t want to show passwords in clear text, you can use Codestream variables of the secret type. This requires slight modifications to the pipeline; for simplicity I didn’t do this in the demo. Likewise, the related Orchestrator workflow could store credentials as secrets and pass them through.

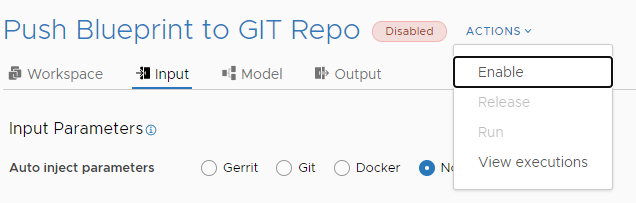

Save and enable the pipeline

Preparation of vRealize Orchestrator

Create REST Host

Run the “Add a REST host” workflow.

Specify the following parameters:

- Name: <custom name of rest host entity>

- URL: <URL of the vRA host, e.g. https://server.local>

- Host Authentication/Host’s authentication type: Basic

- User credentials/Authentication username: <vRA username>

- User credentials/Authentication password: <vRA user password>

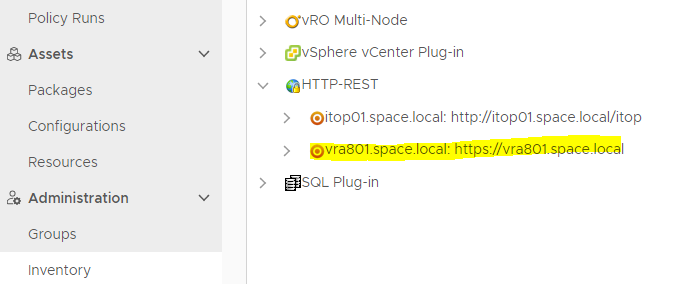

This should add the REST host to the vRO inventory.
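To check the same credentials outside vRO, the following sketch requests an API token following the two-step flow in the vRA 8.x API documentation: username and password are exchanged for a refresh token at `/csp/gateway/am/api/login`, which is then exchanged for a bearer token at `/iaas/api/login`. It assumes `curl` and `jq`, a user from the default identity source (other sources may also need a `domain` field in the login body), and `-k` for a self-signed lab certificate; `vra_token` and the other function names are hypothetical.

```shell
# Sketch: obtain a vRA 8.x API token using the REST host credentials.

# Escape backslashes and double quotes so a value is a valid JSON string.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# JSON body for the login call.
login_payload() {
  printf '{"username":"%s","password":"%s"}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Usage: vra_token <vra-url> <username> <password>; prints the bearer token.
# -k skips TLS verification for self-signed lab certificates.
vra_token() {
  local url="$1" refresh
  # Step 1: username/password -> refresh token.
  refresh=$(curl -kfsS -X POST "$url/csp/gateway/am/api/login" \
    -H 'Content-Type: application/json' \
    -d "$(login_payload "$2" "$3")" | jq -r '.refresh_token')
  if [ -z "$refresh" ] || [ "$refresh" = null ]; then
    echo "login failed" >&2
    return 1
  fi
  # Step 2: refresh token -> bearer token for the vRA APIs.
  curl -kfsS -X POST "$url/iaas/api/login" \
    -H 'Content-Type: application/json' \
    -d "{\"refreshToken\":\"$refresh\"}" | jq -r '.token'
}
```

For example: `VRA_TOKEN=$(vra_token https://server.local admin 'secret')`. A non-empty token means the user name and password are valid for the API.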

Import vRealize Orchestrator package

Download the vRealize Orchestrator package.

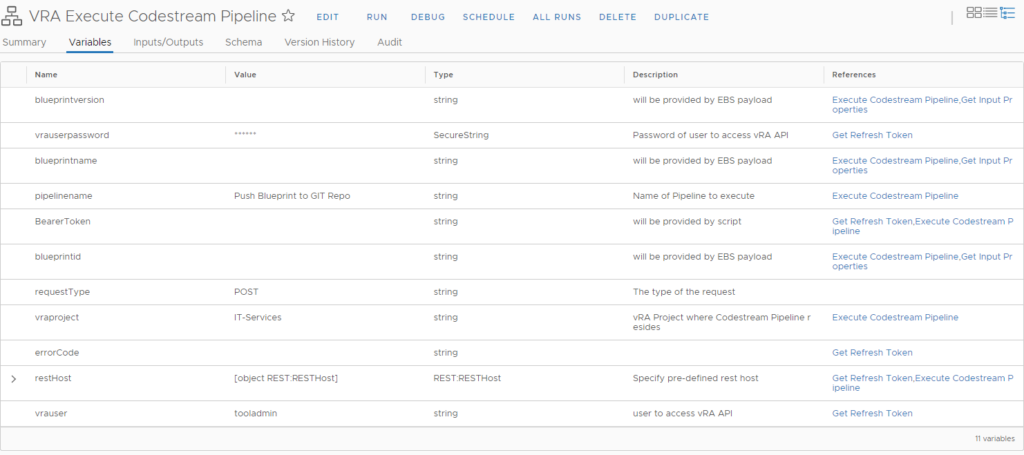

Import the package into vRealize Orchestrator. This imports a workflow called “VRA Execute Codestream Pipeline” into the VMware custom/vRA folder of the workflow tree.

Set the workflow variables according to your environment. All variables whose description reads “will be provided by …” should stay empty.
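For troubleshooting, it can help to start the pipeline without vRO. The sketch below assumes the Code Stream REST endpoint `POST /codestream/api/pipelines/{id}/executions` with a body containing `comments` and `input`, and a bearer token in `VRA_TOKEN`; check both against the API reference of your vRA version. Input names depend on the imported pipeline, so the inputs are passed in as a ready-made JSON object. This is not the implementation of the “VRA Execute Codestream Pipeline” workflow, and the function names are hypothetical.

```shell
# Sketch: start a Codestream pipeline through the REST API.
# Endpoint path and body fields are assumptions; verify for your vRA version.

# Build the execution body around a ready-made JSON object of inputs.
# The comment is inserted as-is, so keep it free of quotes and backslashes.
execution_payload() {
  printf '{"comments":"%s","input":%s}' "$1" "$2"
}

# Usage: start_pipeline <vra-url> <pipeline-id> <inputs-json>
start_pipeline() {
  local url="$1" pipeline_id="$2" inputs_json="$3"
  if [ -z "${VRA_TOKEN:-}" ]; then
    echo "set VRA_TOKEN to a vRA bearer token first" >&2
    return 1
  fi
  # -k skips TLS verification for self-signed lab certificates.
  curl -kfsS -X POST "$url/codestream/api/pipelines/$pipeline_id/executions" \
    -H "Authorization: Bearer $VRA_TOKEN" \
    -H 'Content-Type: application/json' \
    -d "$(execution_payload "Started manually for testing" "$inputs_json")"
}
```

For example: `start_pipeline https://server.local "$PIPELINE_ID" '{"blueprintName":"demo"}'`, where `blueprintName` stands in for whatever inputs your imported pipeline defines.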

Create vRA Subscription

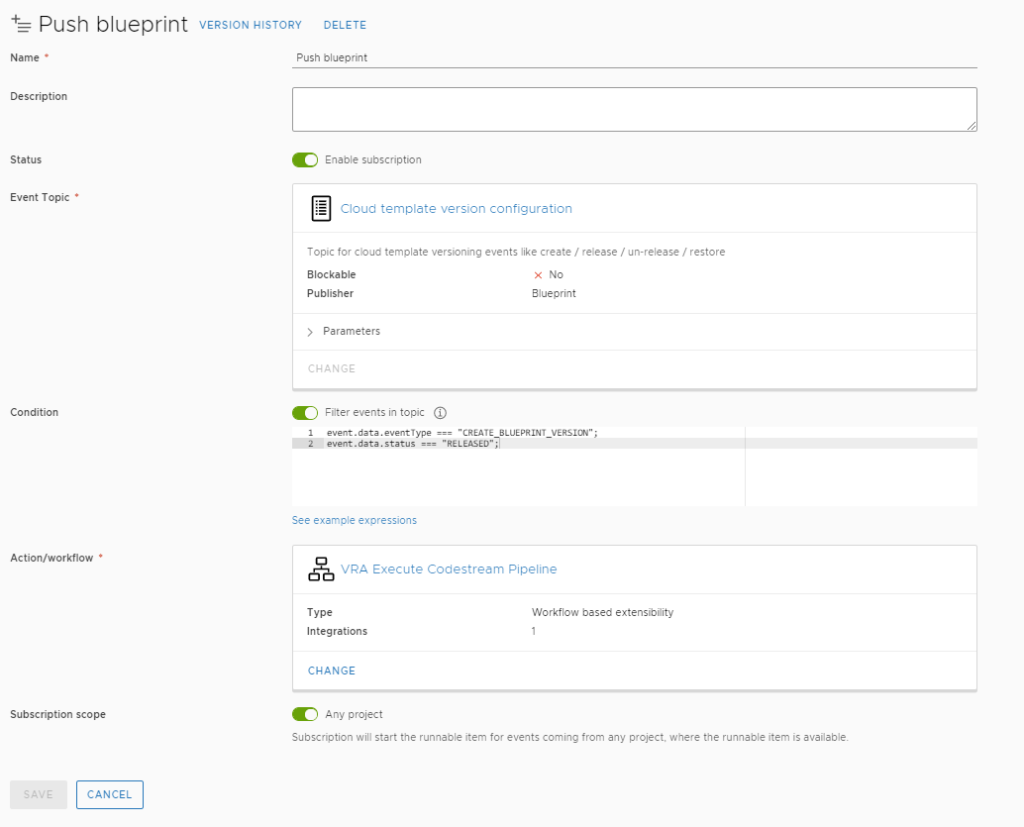

The Orchestrator workflow from the previous section is invoked by a Cloud Assembly subscription, which reacts to the event of a new blueprint version being created and released.

Here are the details of the required subscription parameters:

As configured above, the subscription runs for every blueprint. To limit execution to specific blueprints or projects, add conditions or restrict the subscription's scope.

Try the solution

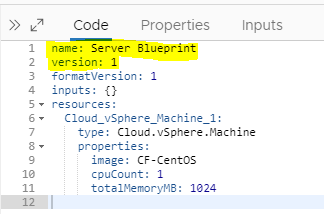

To try the solution, create a blueprint in Cloud Assembly. For the blueprint to sync back to vRA properly, its YAML must contain name and version properties (the first two lines in the example below).

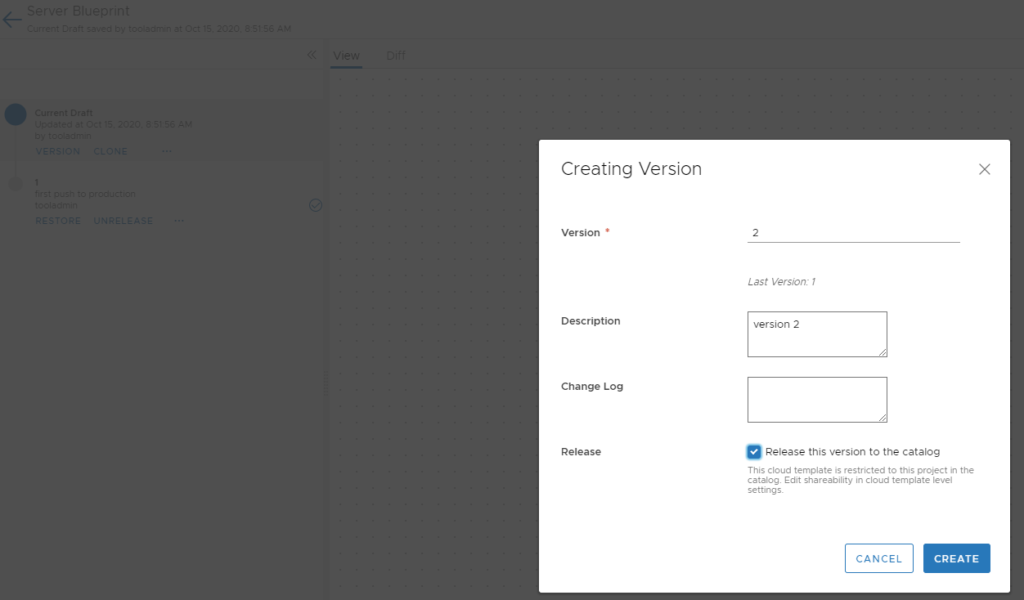
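A quick check before releasing, as a sketch: it only verifies that top-level `name:` and `version:` keys with a value exist (at column 0, not necessarily in the first two lines) and does not parse YAML. The function name is hypothetical.

```shell
# Check that a blueprint YAML has top-level name and version keys with values.
# Simple text check on column-0 keys only, not a YAML parser.
has_name_and_version() {
  grep -Eq '^name:[[:space:]]*[^[:space:]]' "$1" &&
    grep -Eq '^version:[[:space:]]*[^[:space:]]' "$1"
}
```

For example: `has_name_and_version blueprint.yaml || echo "add name: and version: lines first"`. Indented keys such as a resource's `name:` property are deliberately ignored.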

Once all content has been added to the blueprint, create a new version and select “Release this version to the catalog”.

This automatically triggers an event, and the blueprint.yaml file is pushed to the git repository.
