After integrating NSX-T with K8S, I sometimes run into issues with coredns not working.

Common root cause: the K8S internal DNS infrastructure needs non-NAT’ed network access from container PODs to K8S Nodes and vice versa. As NSX-T NCP’s default behaviour is to NAT your K8S Namespaces, this can, depending on your overall architecture, cause connection issues.

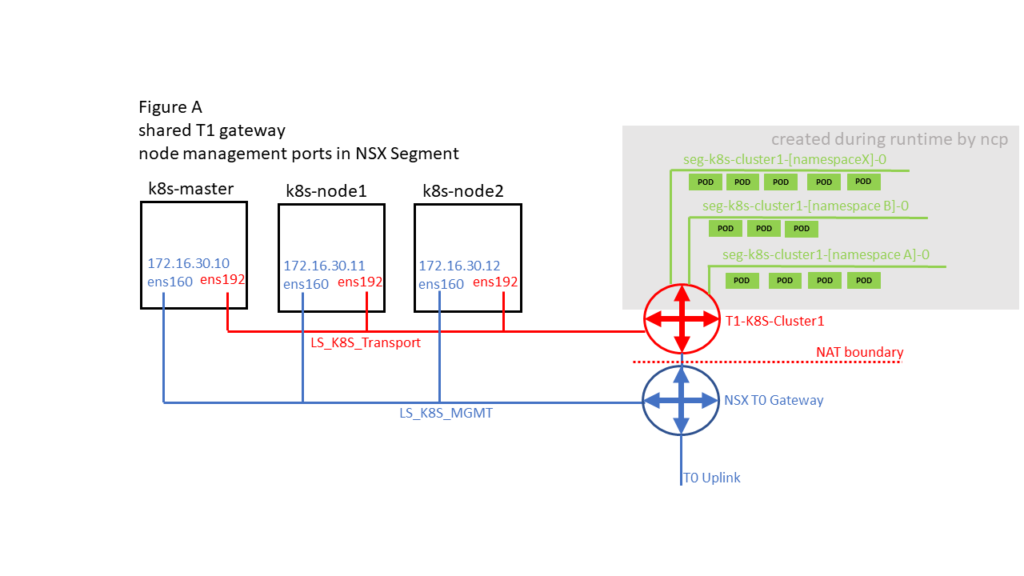

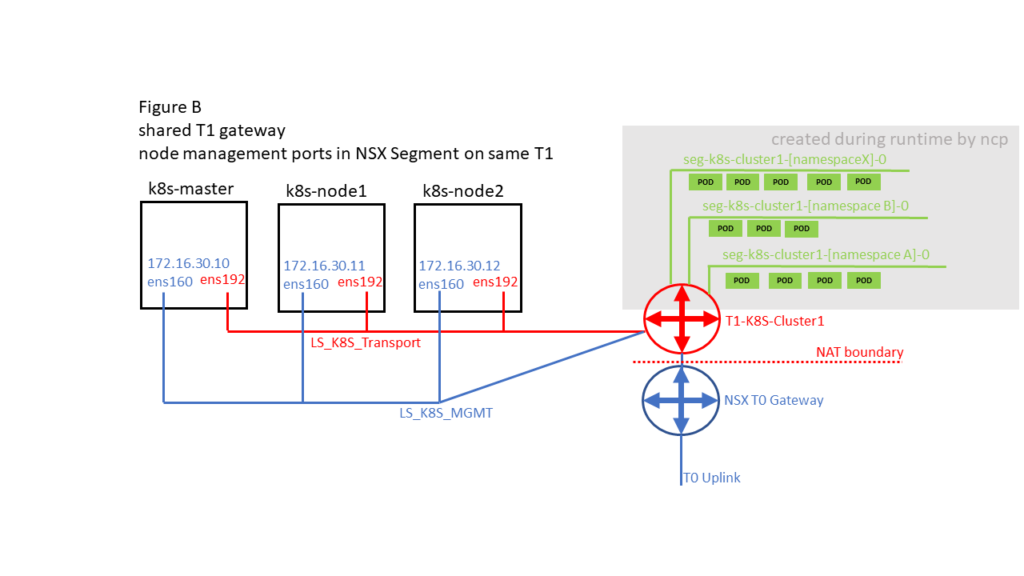

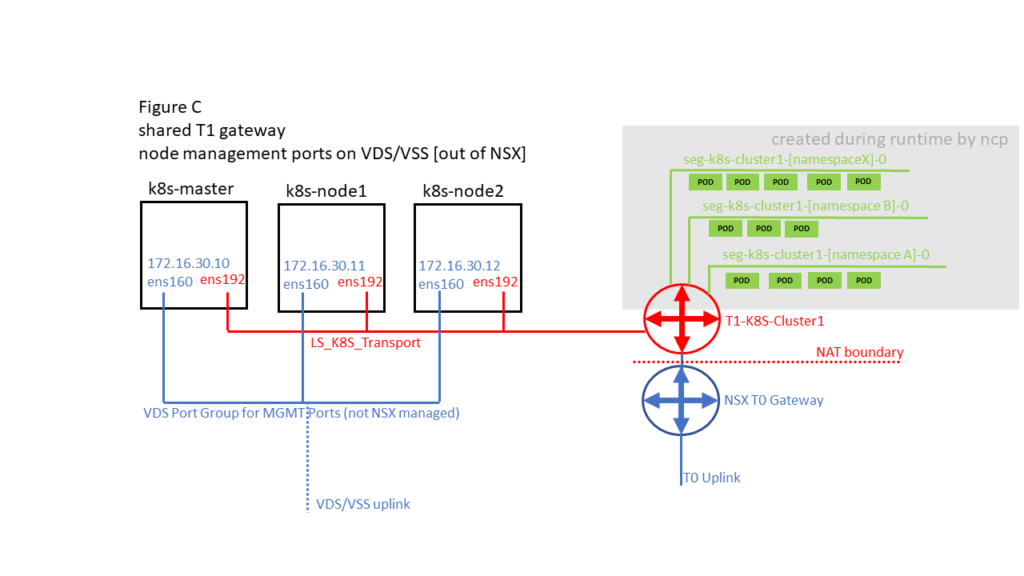

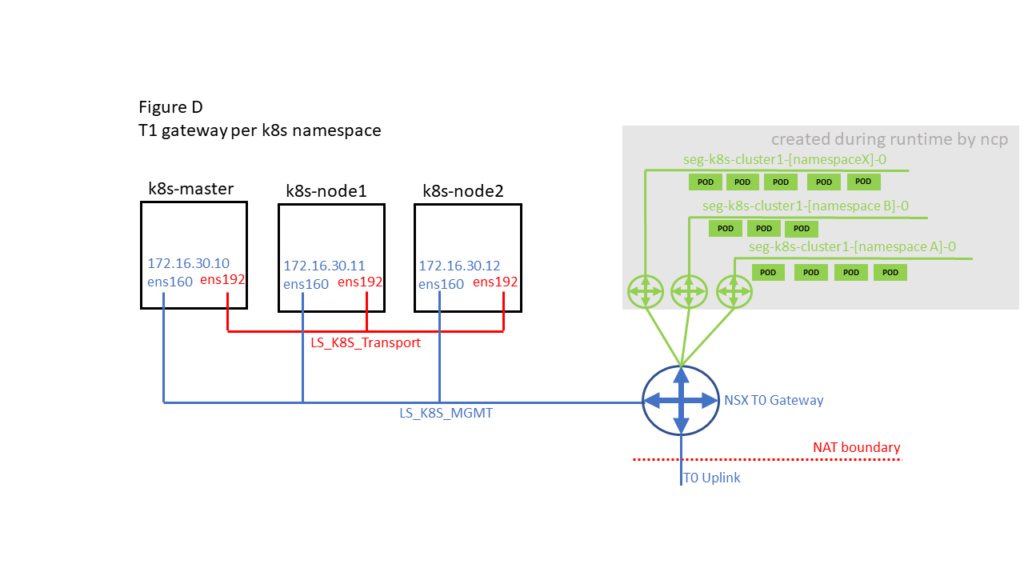

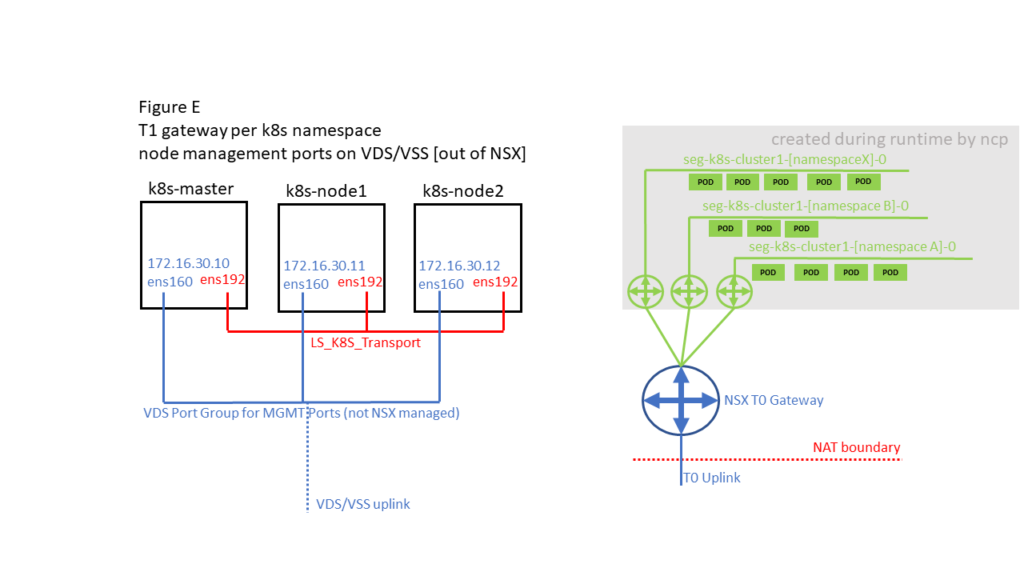

In general, the issue described here only occurs when your K8S overlay networks are connected to a different Distributed Router than the management ports of the K8S Nodes [see 2.4].

1. Short Version

Ensure no Distributed Firewall rule is blocking DNS traffic 😉

- try to ping your K8S Nodes (management port) from a container pod

- try to ping a K8S container pod from your K8S Nodes

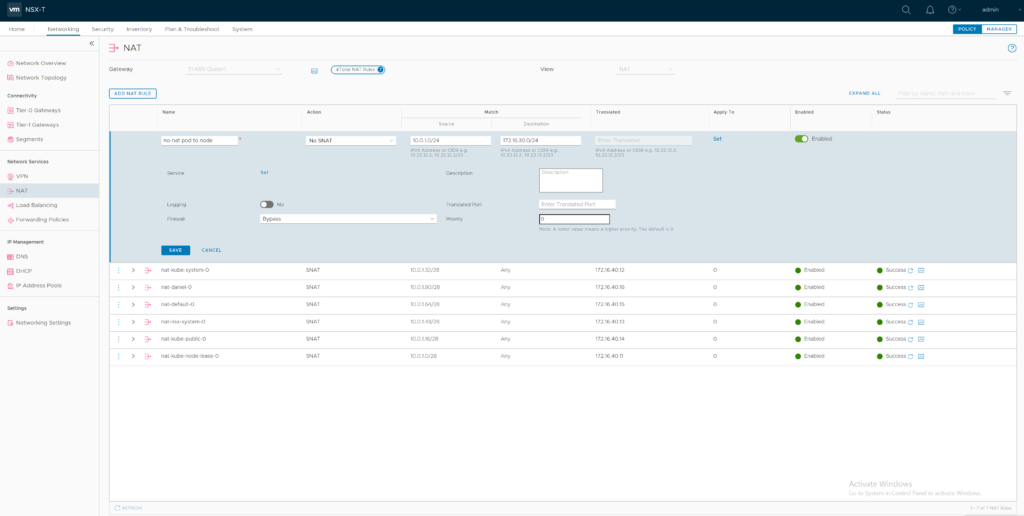

Both directions must work. If they do not, identify the Gateway doing NAT for your K8S environment and implement a NO-SNAT rule between your container network (source) and your K8S Node management network (destination), placed before all other SNAT rules.
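The two ping checks can be scripted. This is a minimal Python sketch, assuming it runs on a K8S Node with kubectl configured; the helper names are hypothetical, and the pod name and IPs you pass in are your own (the examples in the note below come from section 2).

```python
import subprocess

def pod_to_node_cmds(pod, node_ips, count=4):
    """Commands that ping each K8S Node management IP from inside `pod`."""
    return [["kubectl", "exec", pod, "--", "ping", "-c", str(count), ip] for ip in node_ips]

def node_to_pod_cmds(pod_ips, count=4):
    """Commands that ping each pod IP from the K8S Node this script runs on."""
    return [["ping", "-c", str(count), ip] for ip in pod_ips]

def run_all(cmds, timeout=30):
    """Run each command; map the command line to True if it exited with status 0."""
    results = {}
    for cmd in cmds:
        try:
            ok = subprocess.run(cmd, capture_output=True, timeout=timeout).returncode == 0
        except (FileNotFoundError, subprocess.TimeoutExpired):
            ok = False  # binary missing or command hung: count it as a failed check
        results[" ".join(cmd)] = ok
    return results
```

For example, `run_all(pod_to_node_cmds("dnsutils", ["172.16.30.11"]) + node_to_pod_cmds(["10.0.1.34"]))` should return only True values once both directions work.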

2. Detailed analysis

2.1 Issue

For troubleshooting, you can deploy the dnsutils pod:

vm@k8s-master:~$ kubectl apply -f https://k8s.io/examples/admin/dns/dnsutils.yaml

pod/dnsutils created

and check that it is running:

vm@k8s-master:~$ kubectl get pods dnsutils

NAME READY STATUS RESTARTS AGE

dnsutils 1/1 Running 0 [time]

Verify that internal name resolution fails:

vm@k8s-master:~$ kubectl exec -i -t dnsutils -- nslookup kubernetes.default

;; connection timed out; no servers could be reached

2.2 coredns Deployment / kube-dns Service

The kube-dns service exists:

vm@k8s-master:~$ kubectl get service -nkube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 53/UDP,53/TCP,9153/TCP [time]

but it has no endpoints:

vm@k8s-master:~$ kubectl get endpoints kube-dns -nkube-system

NAME ENDPOINTS AGE

kube-dns [time]
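My understanding is that the ENDPOINTS column lists only ready addresses; pods that exist but fail their readiness probe are kept under `notReadyAddresses` in the Endpoints object. A small Python sketch to make that visible, assuming kubectl is configured where it runs (the function names are hypothetical):

```python
import json
import subprocess

def fetch_endpoints(name="kube-dns", namespace="kube-system"):
    """Return the Endpoints object as a dict via `kubectl get endpoints -o json`."""
    out = subprocess.run(
        ["kubectl", "get", "endpoints", name, "-n", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)

def split_addresses(endpoints):
    """Split an Endpoints object into (ready IPs, not-ready IPs)."""
    ready, not_ready = [], []
    for subset in endpoints.get("subsets") or []:
        ready += [a["ip"] for a in subset.get("addresses") or []]
        not_ready += [a["ip"] for a in subset.get("notReadyAddresses") or []]
    return ready, not_ready
```

In the broken state I would expect `split_addresses(fetch_endpoints())` to return no ready IPs and the coredns pod IPs as not ready.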

The coredns PODs are running, but not ready:

vm@k8s-master:~$ kubectl get pods -nkube-system -o wide -lk8s-app=kube-dns

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-5d999964c5-bpnbj 0/1 Running 0 [time] 10.0.1.34 k8s-master

coredns-5d999964c5-kwjbg 0/1 Running 0 [time] 10.0.1.37 k8s-node1

A ping from the dnsutils pod to the K8S Node management IPs fails:

vm@k8s-master:~$ kubectl exec -i -t dnsutils -- ping 172.16.30.11 -c4

PING 172.16.30.11 (172.16.30.11): 56 data bytes

--- 172.16.30.11 ping statistics ---

4 packets transmitted, 0 packets received, 100% packet loss

command terminated with exit code 1

2.3 Check the NSX-T Distributed Firewall (DFW)

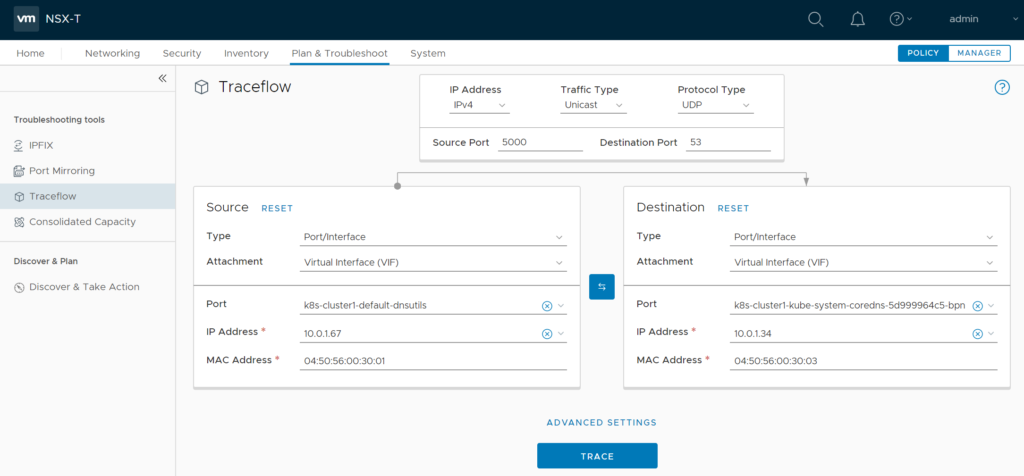

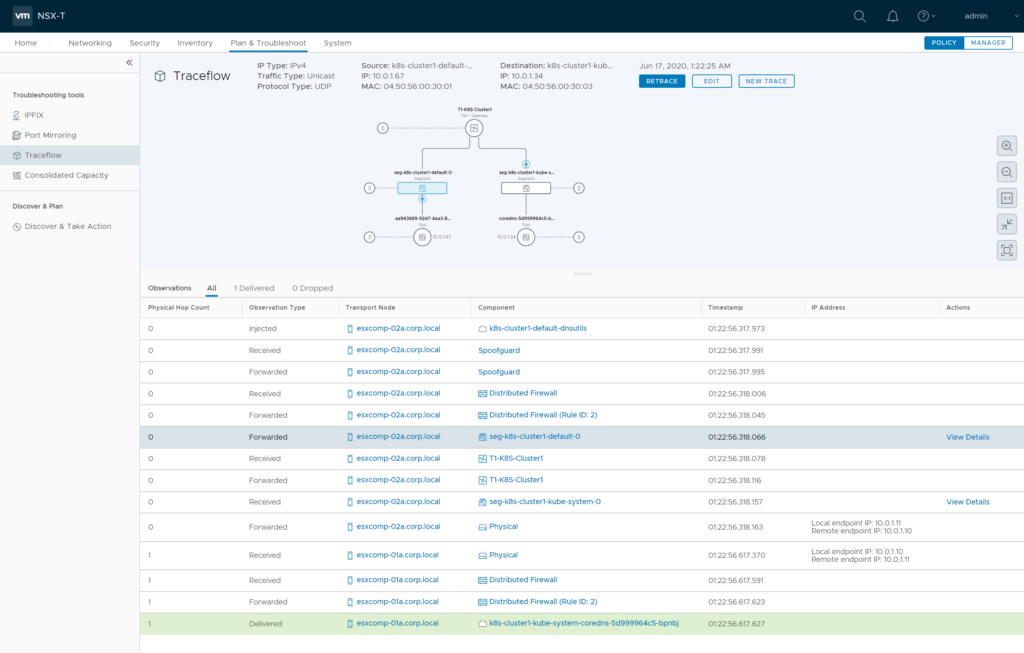

Use NSX-T Traceflow to check whether the DFW blocks DNS traffic from your PODs to the coredns PODs. Access to UDP/TCP port 53 on all coredns PODs must work. As the previous ping also failed, you should also ensure that ICMP is not being blocked by the DFW.
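Alongside Traceflow, a direct probe shows whether UDP/53 on a coredns pod answers at all. This Python sketch sends a minimal RFC 1035 A query and returns the response code, or None if nothing comes back. It only covers UDP (not TCP/53), the `port` parameter exists only to make it testable, and it has to run somewhere with Python on the network path you want to test.

```python
import random
import socket
import struct

def build_query(name, qtype=1):
    """Build a minimal DNS query for `name` (qtype 1 = A). Returns (txid, packet)."""
    txid = random.randint(0, 0xFFFF)
    # Header: ID, flags (recursion desired), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0
    header = struct.pack("!HHHHHH", txid, 0x0100, 1, 0, 0, 0)
    labels = name.rstrip(".").split(".")
    qname = b"".join(bytes([len(l)]) + l.encode("ascii") for l in labels) + b"\x00"
    return txid, header + qname + struct.pack("!HH", qtype, 1)  # QCLASS 1 = IN

def probe_udp53(server_ip, name="kubernetes.default.svc.cluster.local", timeout=2.0, port=53):
    """Return the DNS RCODE if the server answers over UDP, or None if nothing comes back."""
    txid, packet = build_query(name)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        try:
            sock.sendto(packet, (server_ip, port))
            data, _ = sock.recvfrom(512)
        except OSError:  # includes socket.timeout
            return None
    if len(data) < 12 or struct.unpack("!H", data[:2])[0] != txid:
        return None  # truncated reply or a reply to some other query
    return data[3] & 0x0F  # RCODE is the low 4 bits of the second flags byte
```

For example, `probe_udp53("10.0.1.34")`: any number (0 = NOERROR) means the packet got through, None means no answer. From inside the dnsutils pod, `kubectl exec -i -t dnsutils -- dig @10.0.1.34 kubernetes.default.svc.cluster.local` should give a similar answer without Python.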

2.4 K8S / NSX-T deployment options

There are different options for connecting K8S Pods / Namespaces and the K8S Node management ports. The choice determines where traffic coming from container PODs is NATed. Whenever there is a NAT boundary between container PODs and K8S Node management ports, this DNS issue can occur. Figure B and Figure D should therefore not be affected.

2.5 Resolution

Identify the Gateway responsible for NAT of your K8S environment and create a NO-SNAT rule between your container network (source) and your K8S Node management network (destination), placed before all other SNAT rules. In my K8S / NSX-T post, the container network was referred to as K8S-Container-Network.
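Why rule order matters can be shown with a toy first-match model in Python. This is not NSX-T code: I am assuming that a lower sequence number (priority) is evaluated first, and the CIDRs and the SNAT address 192.0.2.10 are hypothetical placeholders; only 172.16.30.11 and 10.0.1.34 come from the outputs above.

```python
import ipaddress
from dataclasses import dataclass

@dataclass
class NatRule:
    sequence: int              # assumption: lower number = evaluated first
    action: str                # "SNAT" or "NO_SNAT"
    source: str                # source CIDR matched by the rule
    destination: str = "0.0.0.0/0"
    translated: str = ""       # SNAT address; ignored for NO_SNAT

def source_seen_by(rules, src, dst):
    """Source IP the destination sees after first-match NAT evaluation."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for rule in sorted(rules, key=lambda r: r.sequence):
        if s in ipaddress.ip_network(rule.source) and d in ipaddress.ip_network(rule.destination):
            return src if rule.action == "NO_SNAT" else rule.translated
    return src  # no rule matched: traffic leaves untranslated

# Hypothetical values: container network, node management network, SNAT IP.
CONTAINER_NET = "10.0.0.0/16"
NODE_MGMT_NET = "172.16.30.0/24"
snat = NatRule(1000, "SNAT", CONTAINER_NET, translated="192.0.2.10")
no_snat = NatRule(10, "NO_SNAT", CONTAINER_NET, NODE_MGMT_NET)

print(source_seen_by([snat], "10.0.1.34", "172.16.30.11"))           # 192.0.2.10
print(source_seen_by([snat, no_snat], "10.0.1.34", "172.16.30.11"))  # 10.0.1.34
print(source_seen_by([snat, no_snat], "10.0.1.34", "8.8.8.8"))       # 192.0.2.10
```

If the NO_SNAT rule were given a higher sequence number than the SNAT rule, the SNAT rule would match first and the node would still see 192.0.2.10; traffic to other destinations keeps being SNATed either way.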

Restart the coredns deployment:

vm@k8s-master:~$ kubectl rollout restart deployment coredns -nkube-system

deployment.apps/coredns restarted

The coredns pods should come up and become ready:

vm@k8s-master:~$ kubectl get pods -nkube-system -o wide -lk8s-app=kube-dns

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-78d4fb7fff-99h8c 1/1 Running 0 98s 10.0.1.35 k8s-node2

coredns-78d4fb7fff-v6n5r 1/1 Running 0 98s 10.0.1.38 k8s-node1

The kube-dns endpoints should now exist:

vm@k8s-master:~$ kubectl get endpoints kube-dns -nkube-system

NAME ENDPOINTS AGE

kube-dns 10.0.1.35:53,10.0.1.38:53,10.0.1.35:9153 + 3 more… [time]

K8S internal DNS should now work. Forward lookup:

vm@k8s-master:~$ kubectl exec -i -t dnsutils -- nslookup kubernetes.default

Server: 10.96.0.10

Address: 10.96.0.10#53

Name: kubernetes.default.svc.cluster.local

Address: 10.96.0.1
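The answer is for kubernetes.default.svc.cluster.local although only kubernetes.default was queried, because the pod's resolver appends its search domains. A minimal Python sketch of the ndots rule described in resolv.conf(5), assuming the usual ClusterFirst pod settings (ndots:5 and the search domains of a pod in the default namespace with cluster domain cluster.local; node-level search domains are left out):

```python
def lookup_candidates(name, search, ndots=5):
    """Names a stub resolver tries, in order, per the resolv.conf(5) ndots rule."""
    if name.endswith("."):
        return [name]  # already absolute: the search list is not used
    absolute = name + "."
    expanded = [f"{name}.{domain}." for domain in search]
    # Fewer than `ndots` dots: try the search list first, the bare name last.
    if name.count(".") < ndots:
        return expanded + [absolute]
    return [absolute] + expanded

POD_SEARCH = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]

for candidate in lookup_candidates("kubernetes.default", POD_SEARCH):
    print(candidate)
```

The first candidate does not exist, so I would expect the second one, kubernetes.default.svc.cluster.local, to be the name coredns answers for, which matches the output above.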

and reverse lookup:

vm@k8s-master:~$ kubectl exec -i -t dnsutils -- nslookup 10.96.0.10

10.0.96.10.in-addr.arpa name = kube-dns.kube-system.svc.cluster.local.
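The reverse query name is the address with its octets reversed under in-addr.arpa. Python's standard ipaddress module computes it directly, which can help when checking PTR lookups for other service or pod IPs (the helper name is hypothetical):

```python
import ipaddress

def ptr_name(ip):
    """Reverse-lookup (PTR) name for an IPv4 or IPv6 address."""
    return ipaddress.ip_address(ip).reverse_pointer

print(ptr_name("10.96.0.10"))  # 10.0.96.10.in-addr.arpa
```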
