Since vRealize Automation 8.2, the product includes blueprints that can leverage Terraform services. The implementation uses a Kubernetes cluster on which it creates a temporary pod that, by default, downloads the Terraform container image, the Terraform binary and the required providers from the internet. For some customers it’s mandatory to have no internet connection in the datacenter, so a solution is required that can run in a fully air-gapped environment.

The documentation on how to set this up is hosted here:

This blog adds several details to it.

Special thanks to Harald Ferch whose work was the base for this article.

Note: This does not apply to vRA Cloud, which by definition is not air-gapped. Also, for vRA Cloud the Kubernetes cluster is VMware-managed and can’t be modified or moved on-prem for now.

Create git repository structure

To use the Terraform service, a git repository is required. In an air-gapped environment this will typically be an on-prem installation of GitLab or GitHub Enterprise. In this example GitLab is used.

The Terraform files are stored in the git repository. In my case I created a repository named “Terraform”.

Next, create a sub-folder (here: Kubernetes) that contains the Terraform files such as main.tf.

I will not go into detail on how to write a syntactically correct Terraform file. In this example I used a simple Terraform file that just creates a namespace on a Kubernetes cluster.

Besides the Terraform file (main.tf), the Kubernetes config file (kubeconfig) is required in the same directory. It is named config, matching config_path in the example below.

Example Terraform file for creating a Kubernetes namespace:

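```hcl
# Kubernetes provider, authenticated with the kubeconfig file "config"
# that is stored next to main.tf in the git repository
provider "kubernetes" {
  config_path = "config"
}

# Creates a single namespace on the target cluster
resource "kubernetes_namespace" "example" {
  metadata {
    name = "my-first-namespace"
  }
}
```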

If the Terraform file uses a provider (such as the kubernetes provider above), the provider binary must be made available offline; otherwise Terraform tries to download it from the internet.

The following file structure is needed:

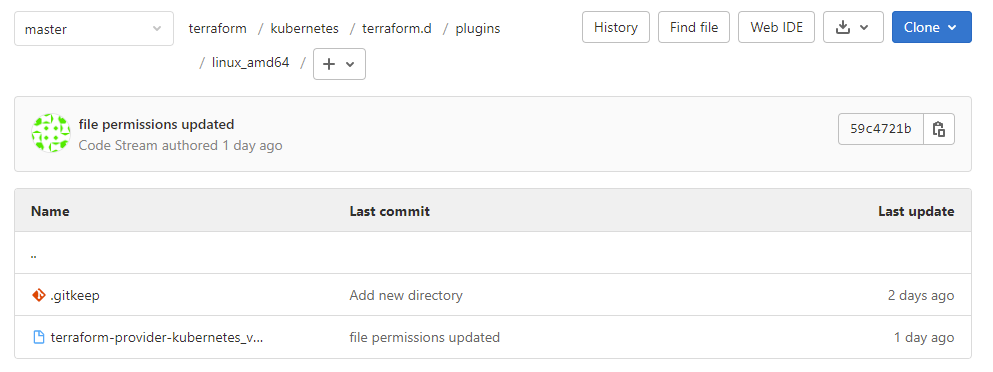

Terraform / Kubernetes / terraform.d / plugins / linux_amd64

The matching Terraform provider can be downloaded from releases.hashicorp.com.

Next, store the unzipped provider in the directory above.
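If you prepare the folder on a client instead of through the web UI, the unzip-and-store step can be scripted. The following is a minimal Python sketch, not part of vRA: `stage_provider` is a hypothetical helper that unpacks a provider zip into the Terraform 0.12 plugin folder used in this article and sets the execute bits, which Python's `zipfile` module does not restore on extraction.

```python
import stat
import zipfile
from pathlib import Path
from typing import List


def stage_provider(zip_path: str, tf_folder: str) -> List[Path]:
    """Unpack a provider zip into <tf_folder>/terraform.d/plugins/linux_amd64.

    Hypothetical helper: mirrors the Terraform 0.12 plugin layout used in this article.
    """
    plugin_dir = Path(tf_folder) / "terraform.d" / "plugins" / "linux_amd64"
    plugin_dir.mkdir(parents=True, exist_ok=True)
    staged = []
    with zipfile.ZipFile(zip_path) as archive:
        for member in archive.infolist():
            if member.is_dir():
                continue
            # Flatten any folders inside the zip; the provider binary sits directly in linux_amd64.
            target = plugin_dir / Path(member.filename).name
            target.write_bytes(archive.read(member))
            # zipfile does not restore Unix permissions, so add the execute bits explicitly.
            target.chmod(target.stat().st_mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
            staged.append(target)
    return staged
```

For example, `stage_provider("terraform-provider-kubernetes_<version>_linux_amd64.zip", "Terraform/Kubernetes")` run inside a local clone places the binary where Terraform 0.12 looks for it.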

If the provider file is too large for a web-based upload, you can use the Web IDE to upload it (see button in screenshot). Alternatively, clone the repository to a client, copy the provider into it and upload it with git push. Make sure that the provider file has execute permissions before the upload!
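Before pushing from a client, a quick check of the cloned sub-folder can catch a missing config file or a provider without execute permission. This is a sketch that assumes the layout described in this article (main.tf, config and terraform.d/plugins/linux_amd64/terraform-provider-*); `check_tf_folder` is a hypothetical name.

```python
import stat
from pathlib import Path
from typing import List


def check_tf_folder(tf_folder: str) -> List[str]:
    """Return problems found in one Terraform sub-folder of a local git clone (empty list = OK)."""
    folder = Path(tf_folder)
    problems = []
    # main.tf plus the kubeconfig file referenced by config_path = "config"
    for required in ("main.tf", "config"):
        if not (folder / required).is_file():
            problems.append(f"missing {required}")
    plugin_dir = folder / "terraform.d" / "plugins" / "linux_amd64"
    providers = sorted(plugin_dir.glob("terraform-provider-*"))
    if not providers:
        problems.append(f"no terraform-provider-* file in {plugin_dir}")
    for provider in providers:
        # git records a file as executable (mode 100755) based on the owner execute bit
        if not provider.stat().st_mode & stat.S_IXUSR:
            problems.append(f"{provider.name} is not executable")
    return problems
```

If the check reports a permission problem, `git add --chmod=+x <provider file>` records the executable bit in the git index (useful when the clone lives on Windows), followed by `git commit` and `git push`.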

Configuring Terraform integration in vRA

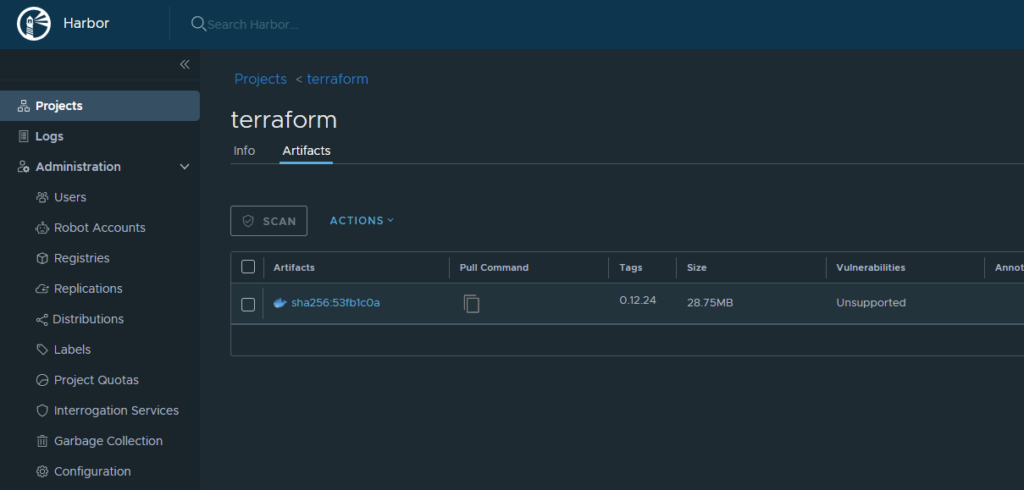

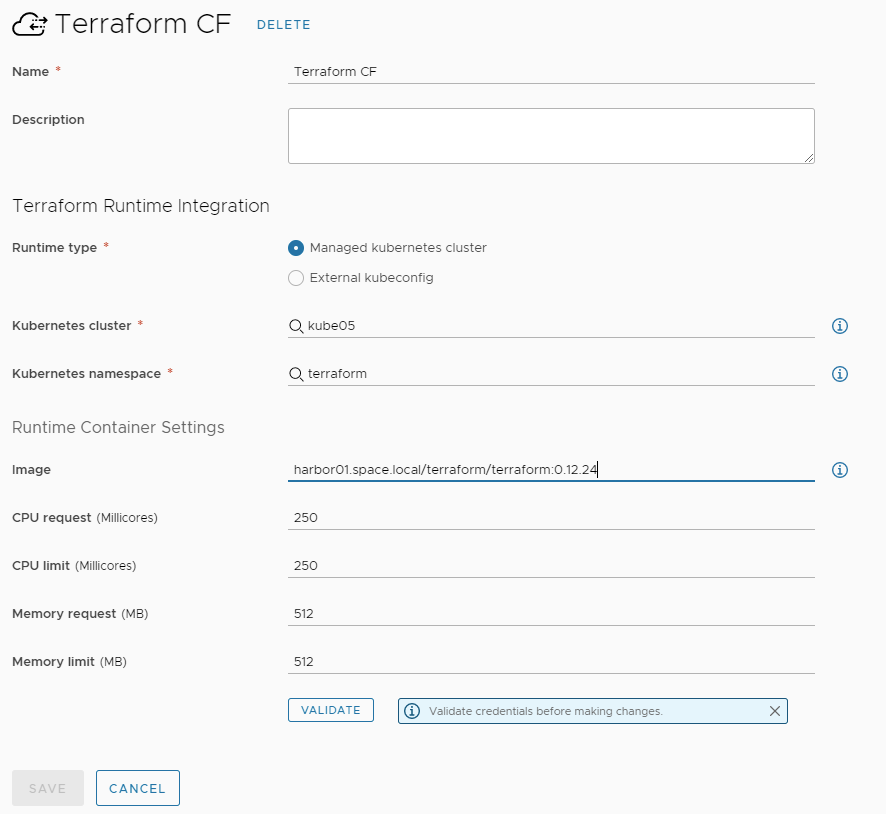

The vRA Terraform integration requires a Kubernetes cluster to run temporary containers. By default the required container image is downloaded from docker.io. In an air-gapped environment you want to store the image locally. You can use any on-prem Docker registry; VMware Harbor is one option. If a Harbor registry is used, the following steps are required:

- Make sure that the CA root certificate of the Harbor server is trusted on the Docker and Kubernetes hosts

- Download the Terraform Docker image from docker.io/hashicorp/terraform:0.12.24 (you could use a later version)

- Upload the image to a Harbor project that has public access permissions, so the Kubernetes nodes can pull it without credentials
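The download and upload steps above amount to a handful of docker commands. The sketch below only builds the command list so it can be reviewed before running it; `harbor.example.local` and the project `library` are placeholders for your own registry, and the save/load step assumes the image is carried into the air-gapped network as a tar archive.

```python
from typing import List

SOURCE_IMAGE = "hashicorp/terraform:0.12.24"  # image named in this article (docker.io)


def mirror_commands(registry: str, project: str, source: str = SOURCE_IMAGE) -> List[str]:
    """Build the docker commands that copy the Terraform image into a local registry."""
    name_and_tag = source.rsplit("/", 1)[-1]  # e.g. "terraform:0.12.24"
    target = f"{registry}/{project}/{name_and_tag}"
    archive = name_and_tag.replace(":", "_") + ".tar"
    return [
        # On a machine with internet access:
        f"docker pull {source}",
        f"docker save -o {archive} {source}",
        # After moving the archive into the air-gapped network, on a docker host there:
        f"docker load -i {archive}",
        f"docker tag {source} {target}",
        f"docker push {target}",
    ]
```

The push target (for example harbor.example.local/library/terraform:0.12.24) is the image to enter in the integration. Run docker login against the registry before pushing; Docker expects the CA certificate of a private registry in /etc/docker/certs.d/<registry>/ca.crt.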

When creating the Terraform integration in vRA, make sure the image in the integration configuration points to the local registry.

Configure Terraform version

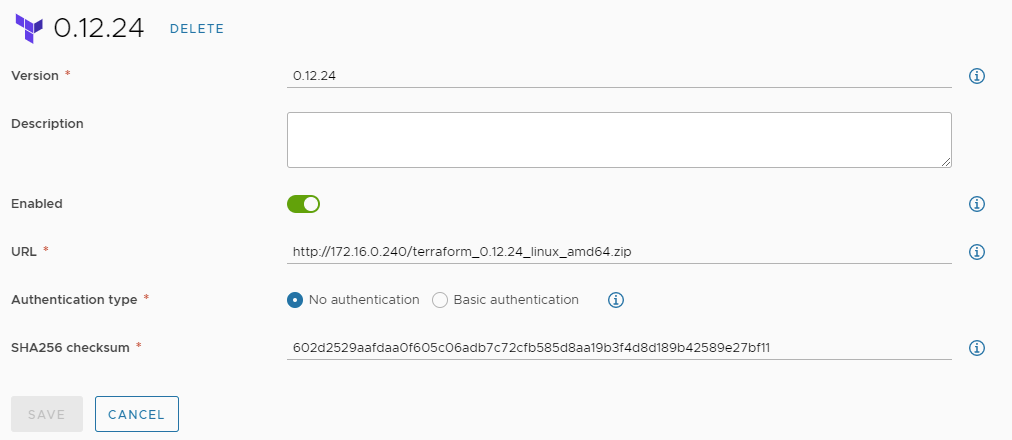

The temporary Kubernetes container must have access to the required Terraform version (the Terraform binary). This is configured under “Terraform Versions” in vRA.

Note: The air-gapped configuration explained here uses Terraform 0.12.24. Later versions such as 0.14.x might require adaptations, because the folder structure for local provider files changed with Terraform 0.13.

Download the required Terraform version from https://releases.hashicorp.com/terraform/ and store it (as the zip file) on a local web server. You can use any available web server; it would also be possible to store the file in your local git repository. If the file is served over HTTPS, make sure to install the CA root certificate on the vRA appliances.
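HashiCorp publishes a SHA256SUMS file next to each Terraform release (for 0.12.24: terraform_0.12.24_SHA256SUMS), so the zip can be verified before it is placed on the internal web server. A minimal Python sketch; the function names are hypothetical and the file names in the usage note are examples.

```python
import hashlib
from pathlib import Path


def sha256_of(path: str) -> str:
    """Hex SHA-256 digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_against_sums(zip_path: str, sums_path: str) -> str:
    """Check a Terraform release zip against HashiCorp's SHA256SUMS file.

    Returns the hex digest on success and raises ValueError otherwise.
    """
    name = Path(zip_path).name
    expected = None
    # Each line has the form "<sha256>  <file name>"
    for line in Path(sums_path).read_text().splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1] == name:
            expected = parts[0].lower()
    if expected is None:
        raise ValueError(f"{name} is not listed in {sums_path}")
    actual = sha256_of(zip_path)
    if actual != expected:
        raise ValueError(f"checksum mismatch for {name}: got {actual}, expected {expected}")
    return actual
```

`verify_against_sums("terraform_0.12.24_linux_amd64.zip", "terraform_0.12.24_SHA256SUMS")` returns the digest, which is also the value to use if your vRA version entry asks for a SHA256 checksum. For a quick lab test, `python3 -m http.server 8080 --directory /srv/terraform` serves a folder over plain HTTP; an existing HTTPS web server is the more typical choice otherwise.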

Find below an example Terraform version entry in vRA:

Once all configuration is done, you can use the vRA Cloud Template wizard to create your Terraform Cloud Template and start a deployment.

Troubleshooting Terraform execution

Issues might come up during execution of a Terraform blueprint. vRA starts a temporary pod on the configured Kubernetes environment, but this pod is removed shortly after execution. To keep the pod for further troubleshooting, the following debug option can be set:

Connect to the vRA appliance via SSH as root and execute:

```shell
kubectl -n prelude edit deployment tango-blueprint-service-app
```

Search for JAVA_OPTS in the editor by typing /JAVA_OPTS (kubectl edit opens vi by default).

Add the following line in this section and save the file:

```
-Dterraform.task.skipDeleteJob=true
```

Then wait 2-3 minutes while the pod restarts.

From then on, the pods created for new Terraform Cloud Template deployments remain on the Kubernetes cluster.
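The same change can be made non-interactively, which helps if it has to be repeated. The sketch assumes that JAVA_OPTS is an environment variable with a literal value in the container spec of tango-blueprint-service-app (check this in your version first); `add_debug_flag` and `patch_file` are hypothetical helpers that edit the JSON exported with kubectl.

```python
import json

DEBUG_FLAG = "-Dterraform.task.skipDeleteJob=true"


def add_debug_flag(deployment: dict, flag: str = DEBUG_FLAG) -> bool:
    """Append flag to each literal JAVA_OPTS env value; return True if anything changed.

    Assumption: JAVA_OPTS is set via env[].value (not valueFrom) in the pod template.
    """
    changed = False
    for container in deployment["spec"]["template"]["spec"]["containers"]:
        for env in container.get("env", []):
            if env.get("name") != "JAVA_OPTS" or "value" not in env:
                continue
            if flag not in env["value"]:
                env["value"] = (env["value"].rstrip() + " " + flag).strip()
                changed = True
    return changed


def patch_file(path: str) -> bool:
    """Patch, in place, a deployment exported with kubectl get ... -o json."""
    with open(path) as handle:
        deployment = json.load(handle)
    changed = add_debug_flag(deployment)
    if changed:
        with open(path, "w") as handle:
            json.dump(deployment, handle, indent=2)
    return changed
```

A possible sequence: `kubectl -n prelude get deployment tango-blueprint-service-app -o json > tango.json`, then `patch_file("tango.json")`, then `kubectl replace -f tango.json`. `kubectl -n prelude rollout status deployment tango-blueprint-service-app` waits for the restarted pod instead of a fixed delay.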

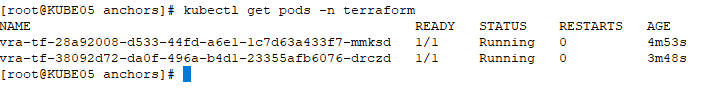

List the running pods on the Kubernetes cluster, for example with kubectl get pods -n terraform.

Open a shell in the pod with this command:

```shell
kubectl exec --stdin --tty vra-tf-28a92008-d533-44fd-a6e1-1c7d63a433f7-mmksd -n terraform -- /bin/sh
```

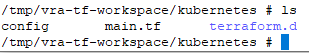

You should find a copy of the git content in the /tmp/vra-tf-workspace directory.

To delete the pods afterwards, use the kubectl delete jobs <job> -n terraform and kubectl delete pods <pod> -n terraform commands.
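Pods created by a Kubernetes Job are named after the Job plus a random suffix, so the leftover Jobs can likely be found by the vra-tf- prefix seen in the pod name above. The sketch filters the output of `kubectl get jobs -n terraform -o json`; the prefix and the namespace are assumptions taken from this example, and the function names are hypothetical.

```python
import json
from typing import List

JOB_PREFIX = "vra-tf-"  # assumed prefix, taken from the pod name above


def leftover_jobs(kubectl_json: str, prefix: str = JOB_PREFIX) -> List[str]:
    """Return Job names from `kubectl get jobs -o json` output that match the prefix."""
    items = json.loads(kubectl_json).get("items", [])
    return sorted(
        item["metadata"]["name"]
        for item in items
        if item["metadata"]["name"].startswith(prefix)
    )


def delete_commands(names: List[str], namespace: str) -> List[str]:
    """Build one kubectl delete command per leftover Job."""
    return [f"kubectl delete job {name} -n {namespace}" for name in names]
```

kubectl deletes a Job's pods together with the Job by default (cascading deletion), so deleting the jobs normally clears the pods as well.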

